- 1. Administering and Maintaining the oVirt Environment

- 1.1. Global Configuration

- 1.2. Dashboard

- 1.3. Searches

- 1.3.1. Performing Searches in oVirt

- 1.3.2. Search Syntax and Examples

- 1.3.3. Search Auto-Completion

- 1.3.4. Search Result Type Options

- 1.3.5. Search Criteria

- 1.3.6. Search: Multiple Criteria and Wildcards

- 1.3.7. Search: Determining Search Order

- 1.3.8. Searching for Data Centers

- 1.3.9. Searching for Clusters

- 1.3.10. Searching for Hosts

- 1.3.11. Searching for Networks

- 1.3.12. Searching for Storage

- 1.3.13. Searching for Disks

- 1.3.14. Searching for Volumes

- 1.3.15. Searching for Virtual Machines

- 1.3.16. Searching for Pools

- 1.3.17. Searching for Templates

- 1.3.18. Searching for Users

- 1.3.19. Searching for Events

- 1.4. Bookmarks

- 1.5. Tags

- 2. Administering the Resources

- 2.1. Quality of Service

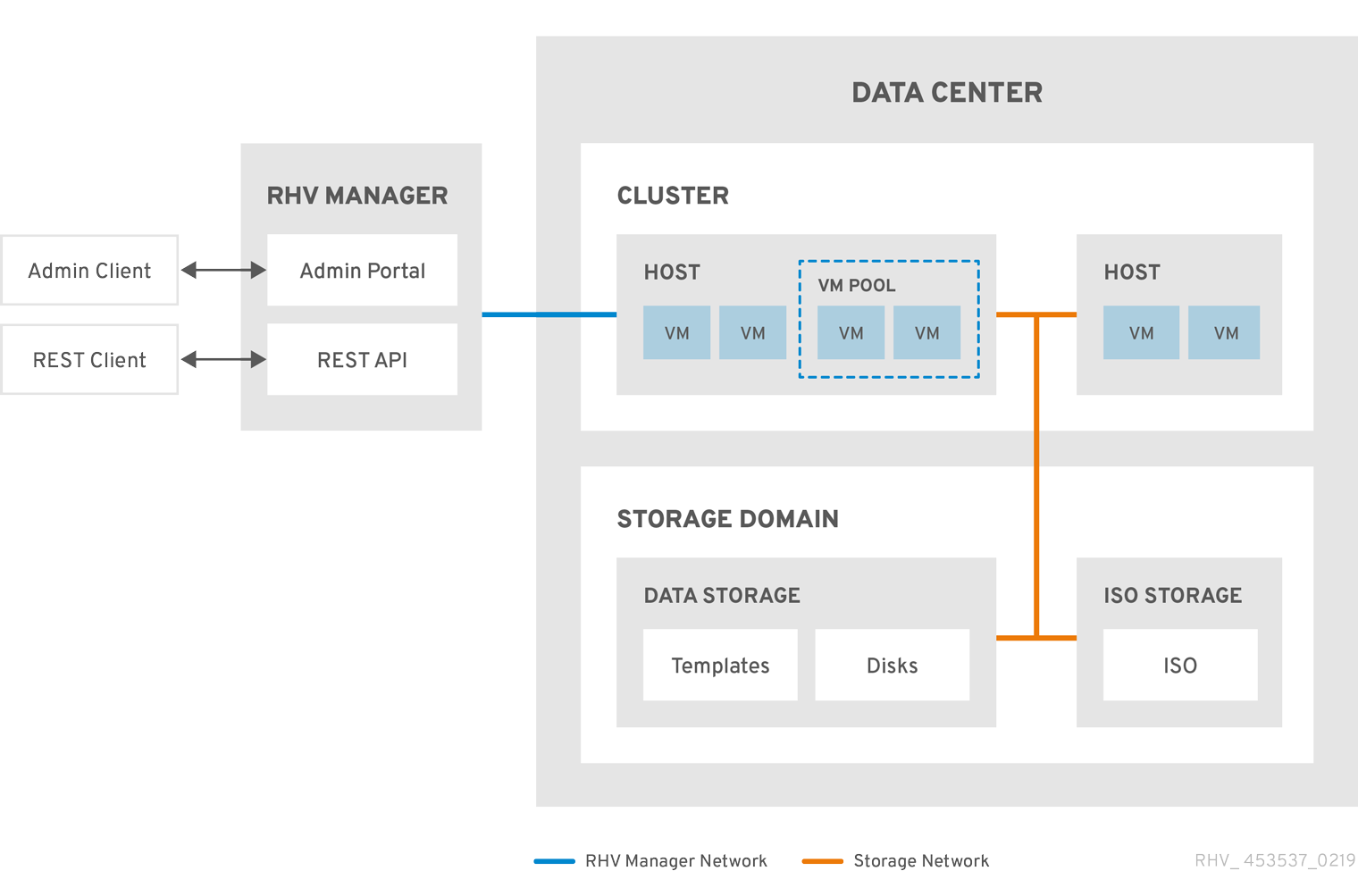

- 2.2. Data Centers

- 2.3. Clusters

- 2.4. Logical Networks

- 2.5. Hosts

- 2.6. Storage

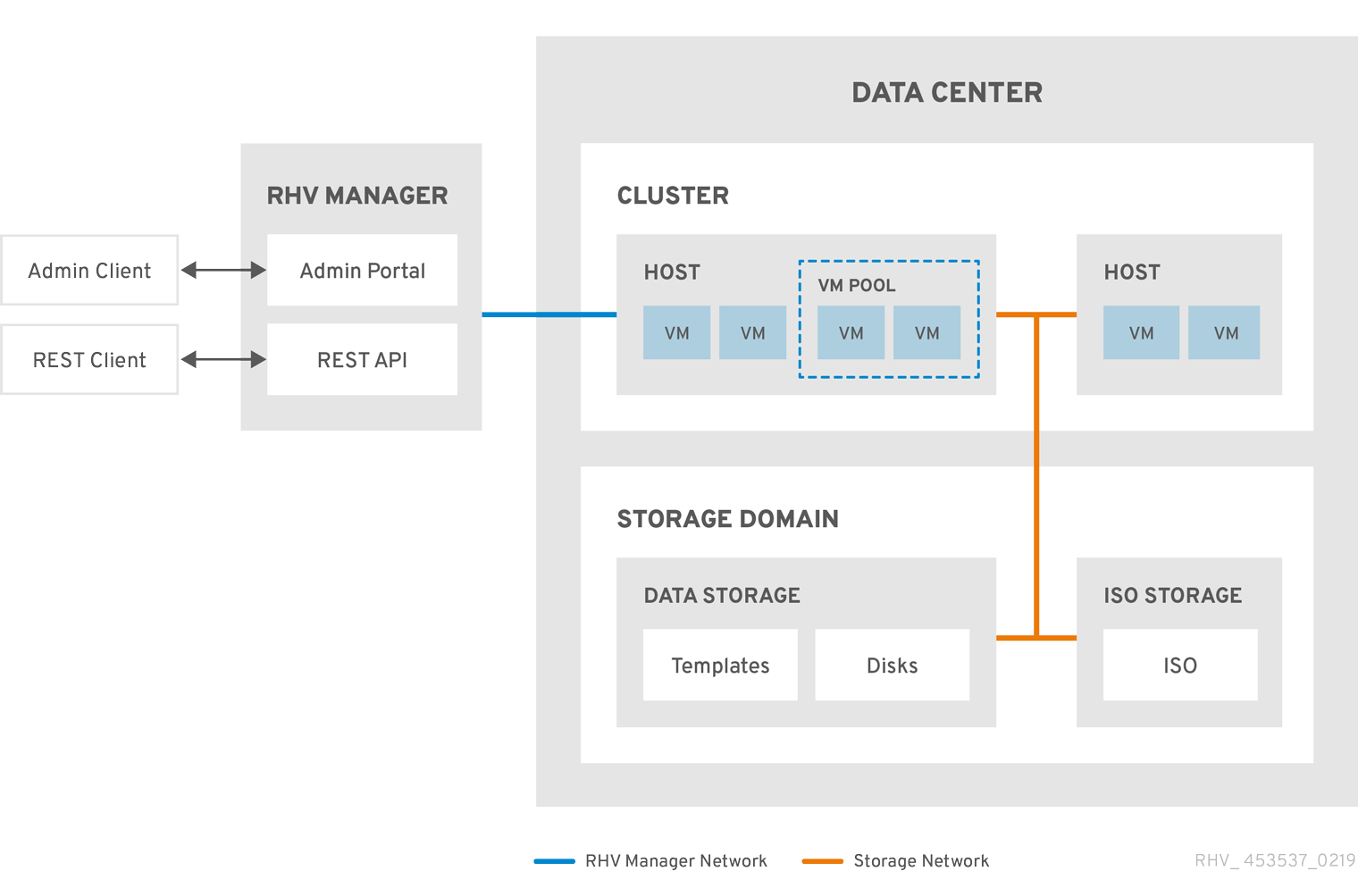

- 2.6.1. About oVirt storage

- 2.6.2. Understanding Storage Domains

- 2.6.3. Preparing and Adding NFS Storage

- 2.6.4. Preparing and adding local storage

- 2.6.5. Preparing and Adding POSIX-compliant File System Storage

- 2.6.6. Preparing and Adding Block Storage

- 2.6.7. Preparing and Adding Gluster Storage

- 2.6.8. Importing Existing Storage Domains

- 2.6.9. Storage Tasks

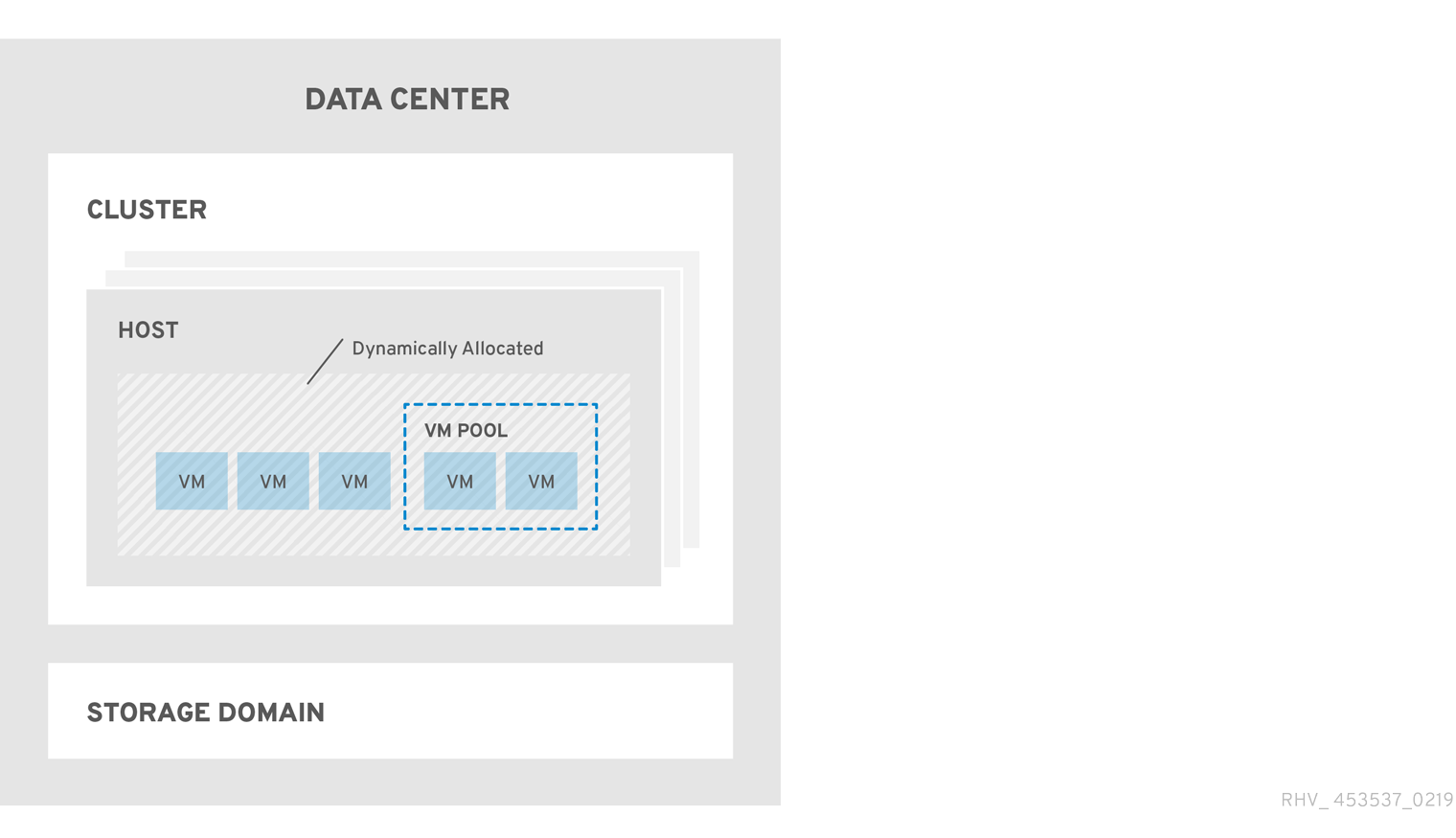

- 2.7. Pools

- 2.8. Virtual Disks

- 2.9. External Providers

- 3. Administering the Environment

- 3.1. Administering the Self-Hosted Engine

- 3.1.1. Maintaining the Self-hosted engine

- 3.1.2. Administering the Engine Virtual Machine

- 3.1.3. Configuring Memory Slots Reserved for the Self-Hosted Engine on Additional Hosts

- 3.1.4. Adding Self-Hosted Engine Nodes to the oVirt Engine

- 3.1.5. Reinstalling an Existing Host as a Self-Hosted Engine Node

- 3.1.6. Booting the Engine Virtual Machine in Rescue Mode

- 3.1.7. Removing a Host from a Self-Hosted Engine Environment

- 3.1.8. Updating a Self-Hosted Engine

- 3.1.9. Changing the FQDN of the Engine in a Self-Hosted Engine

- 3.2. Backups and Migration

- 3.2.1. Backing Up and Restoring the oVirt Engine

- 3.2.2. Migrating the Data Warehouse to a Separate Machine

- 3.2.3. Backing Up and Restoring Virtual Machines Using a Backup Storage Domain

- 3.2.4. Backing Up and Restoring Virtual Machines Using the Backup and Restore API

- 3.2.5. Backing Up and Restoring Virtual Machines Using the Incremental Backup and Restore API

- 3.3. Setting up errata viewing with Red Hat Satellite

- 3.4. Renewing certificates before they expire

- 3.5. Automating Configuration Tasks using Ansible

- 3.6. Users and Roles

- 3.6.1. Introduction to Users

- 3.6.2. Introduction to Directory Servers

- 3.6.3. Configuring an External LDAP Provider

- 3.6.4. Configuring LDAP and Kerberos for Single Sign-on

- 3.6.5. Installing and Configuring Red Hat Single Sign-On

- 3.6.6. User Authorization

- 3.6.7. Administering User Tasks From the Administration Portal

- 3.6.8. Administering User Tasks From the Command Line

- 3.6.9. Configuring Additional Local Domains

- 3.7. Quotas and Service Level Agreement Policy

- 3.7.1. Introduction to Quota

- 3.7.2. Shared Quota and Individually Defined Quota

- 3.7.3. Quota Accounting

- 3.7.4. Enabling and Changing a Quota Mode in a Data Center

- 3.7.5. Creating a New Quota Policy

- 3.7.6. Explanation of Quota Threshold Settings

- 3.7.7. Assigning a Quota to an Object

- 3.7.8. Using Quota to Limit Resources by User

- 3.7.9. Editing Quotas

- 3.7.10. Removing Quotas

- 3.7.11. Service Level Agreement Policy Enforcement

- 3.8. Event Notifications

- 3.9. Utilities

- 3.1. Administering the Self-Hosted Engine

- 4. Gathering Information About the Environment

- 4.1. Monitoring and observability

- 4.2. Log Files

- 4.2.1. Engine Installation Log Files

- 4.2.2. oVirt Engine Log Files

- 4.2.3. SPICE Log Files

- 4.2.4. Host Log Files

- 4.2.5. Setting debug-level logging for oVirt services

- 4.2.6. Main configuration files for oVirt services

- 4.2.7. Setting Up a Host Logging Server

- 4.2.8. Enabling SyslogHandler to pass oVirt Engine logs to a remote syslog server

- Appendix A: VDSM Service and Hooks

- Installing a VDSM hook

- Supported VDSM Events

- The VDSM Hook Environment

- The VDSM Hook Domain XML Object

- Defining Custom Properties

- Setting Virtual Machine Custom Properties

- Evaluating Virtual Machine Custom Properties in a VDSM Hook

- Using the VDSM Hooking Module

- VDSM Hook Execution

- VDSM Hook Return Codes

- VDSM Hook Examples

- Appendix B: Custom Network Properties

- Appendix C: oVirt User Interface Plugins

- Appendix D: oVirt and encrypted communication

- Appendix E: Branding

- Appendix F: System Accounts

- Appendix G: Legal notice

Administration Guide

1. Administering and Maintaining the oVirt Environment

The oVirt environment requires an administrator to keep it running. As an administrator, your tasks include:

-

Managing physical and virtual resources such as hosts and virtual machines. This includes upgrading and adding hosts, importing domains, converting virtual machines created on foreign hypervisors, and managing virtual machine pools.

-

Monitoring the overall system resources for potential problems such as extreme load on one of the hosts, insufficient memory or disk space, and taking any necessary actions (such as migrating virtual machines to other hosts to lessen the load or freeing resources by shutting down machines).

-

Responding to the new requirements of virtual machines (for example, upgrading the operating system or allocating more memory).

-

Managing customized object properties using tags.

-

Managing searches saved as public bookmarks.

-

Managing user setup and setting permission levels.

-

Troubleshooting for specific users or virtual machines for overall system functionality.

-

Generating general and specific reports.

1.1. Global Configuration

Accessed by clicking , the Configure window allows you to configure a number of global resources for your oVirt environment, such as users, roles, system permissions, scheduling policies, instance types, and MAC address pools. This window allows you to customize the way in which users interact with resources in the environment, and provides a central location for configuring options that can be applied to multiple clusters.

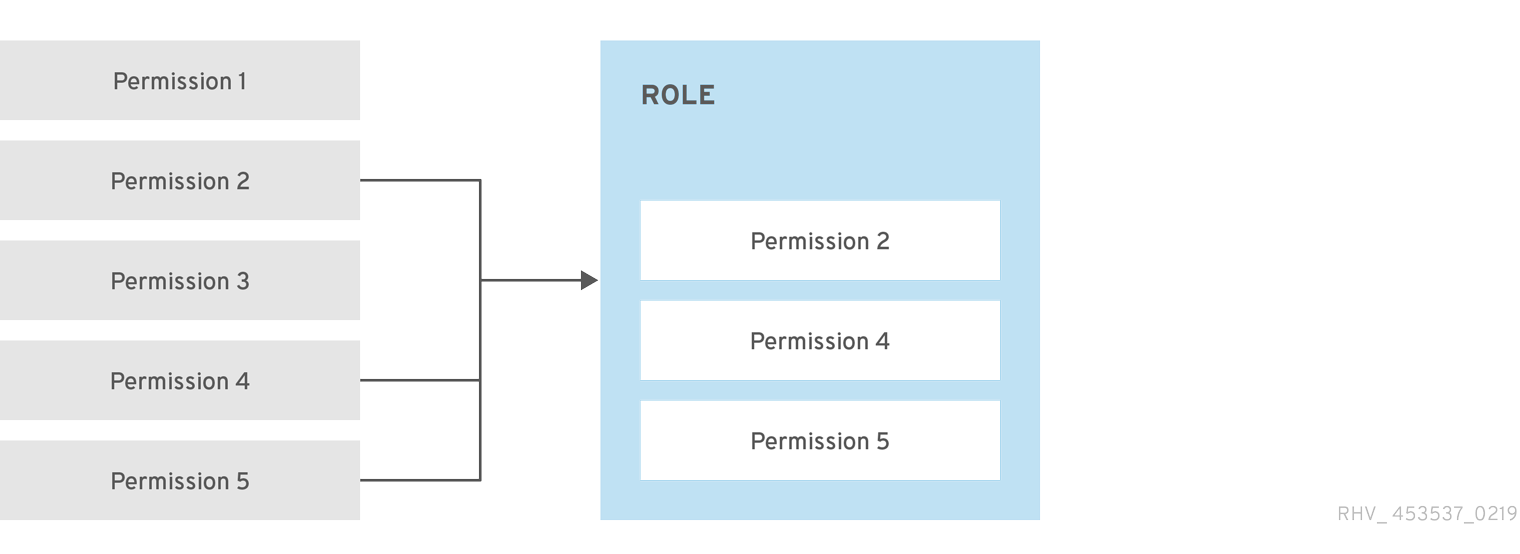

1.1.1. Roles

Roles are predefined sets of privileges that can be configured from oVirt Engine. Roles provide access and management permissions to different levels of resources in the data center, and to specific physical and virtual resources.

With multilevel administration, any permissions which apply to a container object also apply to all individual objects within that container. For example, when a host administrator role is assigned to a user on a specific host, the user gains permissions to perform any of the available host operations, but only on the assigned host. However, if the host administrator role is assigned to a user on a data center, the user gains permissions to perform host operations on all hosts within the cluster of the data center.

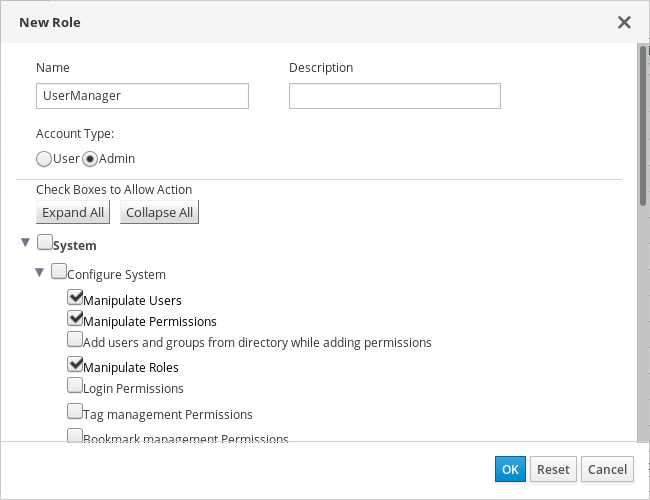

Creating a New Role

If the role you require is not on oVirt’s default list of roles, you can create a new role and customize it to suit your purposes.

-

Click . This opens the Configure window. The Roles tab is selected by default, showing a list of default User and Administrator roles, and any custom roles.

-

Click New.

-

Enter the Name and Description of the new role.

-

Select either Admin or User as the Account Type.

-

Use the Expand All or Collapse All buttons to view more or fewer of the permissions for the listed objects in the Check Boxes to Allow Action list. You can also expand or collapse the options for each object.

-

For each of the objects, select or clear the actions you want to permit or deny for the role you are setting up.

-

Click OK to apply the changes. The new role displays on the list of roles.

Editing or Copying a Role

You can change the settings for roles you have created, but you cannot change default roles. To change default roles, clone and modify them to suit your requirements.

-

Click . This opens the Configure window, which shows a list of default User and Administrator roles, as well as any custom roles.

-

Select the role you wish to change.

-

Click Edit or Copy. This opens the Edit Role or Copy Role window.

-

If necessary, edit the Name and Description of the role.

-

Use the Expand All or Collapse All buttons to view more or fewer of the permissions for the listed objects. You can also expand or collapse the options for each object.

-

For each of the objects, select or clear the actions you wish to permit or deny for the role you are editing.

-

Click OK to apply the changes you have made.

User Role and Authorization Examples

The following examples illustrate how to apply authorization controls for various scenarios, using the different features of the authorization system described in this chapter.

Sarah is the system administrator for the accounts department of a company. All the virtual resources for her department are organized under a oVirt cluster called Accounts. She is assigned the ClusterAdmin role on the accounts cluster. This enables her to manage all virtual machines in the cluster, since the virtual machines are child objects of the cluster. Managing the virtual machines includes editing, adding, or removing virtual resources such as disks, and taking snapshots. It does not allow her to manage any resources outside this cluster. Because ClusterAdmin is an administrator role, it allows her to use the Administration Portal or the VM Portal to manage these resources.

John is a software developer in the accounts department. He uses virtual machines to build and test his software. Sarah has created a virtual desktop called johndesktop for him. John is assigned the UserVmManager role on the johndesktop virtual machine. This allows him to access this single virtual machine using the VM Portal. Because he has UserVmManager permissions, he can modify the virtual machine. Because UserVmManager is a user role, it does not allow him to use the Administration Portal.

Penelope is an office manager. In addition to her own responsibilities, she occasionally helps the HR manager with recruitment tasks, such as scheduling interviews and following up on reference checks. As per corporate policy, Penelope needs to use a particular application for recruitment tasks.

While Penelope has her own machine for office management tasks, she wants to create a separate virtual machine to run the recruitment application. She is assigned PowerUserRole permissions for the data center in which her new virtual machine will reside. This is because to create a new virtual machine, she needs to make changes to several components within the data center, including creating the virtual disk in the storage domain.

Note that this is not the same as assigning DataCenterAdmin privileges to Penelope. As a PowerUser for a data center, Penelope can log in to the VM Portal and perform virtual machine-specific actions on virtual machines within the data center. She cannot perform data center-level operations such as attaching hosts or storage to a data center.

Chris works as the network administrator in the IT department. Her day-to-day responsibilities include creating, manipulating, and removing networks in the department’s oVirt environment. For her role, she requires administrative privileges on the resources and on the networks of each resource. For example, if Chris has NetworkAdmin privileges on the IT department’s data center, she can add and remove networks in the data center, and attach and detach networks for all virtual machines belonging to the data center.

Rachel works in the IT department, and is responsible for managing user accounts in oVirt. She needs permission to add user accounts and assign them the appropriate roles and permissions. She does not use any virtual machines herself, and should not have access to administration of hosts, virtual machines, clusters or data centers. There is no built-in role which provides her with this specific set of permissions. A custom role must be created to define the set of permissions appropriate to Rachel’s position.

The UserManager custom role shown above allows manipulation of users, permissions and roles. These actions are organized under System - the top level object of the hierarchy shown in Object Hierarchy. This means they apply to all other objects in the system. The role is set to have an Account Type of Admin. This means that when she is assigned this role, Rachel can use both the Administration Portal and the VM Portal.

1.1.2. System Permissions

Permissions enable users to perform actions on objects, where objects are either individual objects or container objects. Any permissions that apply to a container object also apply to all members of that container.

User Properties

Roles and permissions are the properties of the user. Roles are predefined sets of privileges that permit access to different levels of physical and virtual resources. Multilevel administration provides a finely grained hierarchy of permissions. For example, a data center administrator has permissions to manage all objects in the data center, while a host administrator has system administrator permissions to a single physical host. A user can have permissions to use a single virtual machine but not make any changes to the virtual machine configurations, while another user can be assigned system permissions to a virtual machine.

User and Administrator Roles

oVirt provides a range of pre-configured roles, from an administrator with system-wide permissions to an end user with access to a single virtual machine. While you cannot change or remove the default roles, you can clone and customize them, or create new roles according to your requirements. There are two types of roles:

-

Administrator Role: Allows access to the Administration Portal for managing physical and virtual resources. An administrator role confers permissions for actions to be performed in the VM Portal; however, it has no bearing on what a user can see in the VM Portal.

-

User Role: Allows access to the VM Portal for managing and accessing virtual machines and templates. A user role determines what a user can see in the VM Portal. Permissions granted to a user with an administrator role are reflected in the actions available to that user in the VM Portal.

User Roles Explained

The table below describes basic user roles which confer permissions to access and configure virtual machines in the VM Portal.

| Role | Privileges | Notes |

|---|---|---|

UserRole |

Can access and use virtual machines and pools. |

Can log in to the VM Portal, use assigned virtual machines and pools, view virtual machine state and details. |

PowerUserRole |

Can create and manage virtual machines and templates. |

Apply this role to a user for the whole environment with the Configure window, or for specific data centers or clusters. For example, if a PowerUserRole is applied on a data center level, the PowerUser can create virtual machines and templates in the data center. |

UserVmManager |

System administrator of a virtual machine. |

Can manage virtual machines and create and use snapshots. A user who creates a virtual machine in the VM Portal is automatically assigned the UserVmManager role on the machine. |

The table below describes advanced user roles which allow you to do more fine tuning of permissions for resources in the VM Portal.

| Role | Privileges | Notes |

|---|---|---|

UserTemplateBasedVm |

Limited privileges to only use Templates. |

Can use templates to create virtual machines. |

DiskOperator |

Virtual disk user. |

Can use, view and edit virtual disks. Inherits permissions to use the virtual machine to which the virtual disk is attached. |

VmCreator |

Can create virtual machines in the VM Portal. |

This role is not applied to a specific virtual machine; apply this role to a user for the whole environment with the Configure window. Alternatively apply this role for specific data centers or clusters. When applying this role to a cluster, you must also apply the DiskCreator role on an entire data center, or on specific storage domains. |

TemplateCreator |

Can create, edit, manage and remove virtual machine templates within assigned resources. |

This role is not applied to a specific template; apply this role to a user for the whole environment with the Configure window. Alternatively apply this role for specific data centers, clusters, or storage domains. |

DiskCreator |

Can create, edit, manage and remove virtual disks within assigned clusters or data centers. |

This role is not applied to a specific virtual disk; apply this role to a user for the whole environment with the Configure window. Alternatively apply this role for specific data centers or storage domains. |

TemplateOwner |

Can edit and delete the template, assign and manage user permissions for the template. |

This role is automatically assigned to the user who creates a template. Other users who do not have TemplateOwner permissions on a template cannot view or use the template. |

VnicProfileUser |

Logical network and network interface user for virtual machine and template. |

Can attach or detach network interfaces from specific logical networks. |

Administrator Roles Explained

The table below describes basic administrator roles which confer permissions to access and configure resources in the Administration Portal.

| Role | Privileges | Notes |

|---|---|---|

SuperUser |

System Administrator of the oVirt environment. |

Has full permissions across all objects and levels, can manage all objects across all data centers. |

ClusterAdmin |

Cluster Administrator. |

Possesses administrative permissions for all objects underneath a specific cluster. |

DataCenterAdmin |

Data Center Administrator. |

Possesses administrative permissions for all objects underneath a specific data center except for storage. |

|

Do not use the administrative user for the directory server as the oVirt administrative user. Create a user in the directory server specifically for use as the oVirt administrative user. |

The table below describes advanced administrator roles which allow you to do more fine tuning of permissions for resources in the Administration Portal.

| Role | Privileges | Notes |

|---|---|---|

TemplateAdmin |

Administrator of a virtual machine template. |

Can create, delete, and configure the storage domains and network details of templates, and move templates between domains. |

StorageAdmin |

Storage Administrator. |

Can create, delete, configure, and manage an assigned storage domain. |

HostAdmin |

Host Administrator. |

Can attach, remove, configure, and manage a specific host. |

NetworkAdmin |

Network Administrator. |

Can configure and manage the network of a particular data center or cluster. A network administrator of a data center or cluster inherits network permissions for virtual pools within the cluster. |

VmPoolAdmin |

System Administrator of a virtual pool. |

Can create, delete, and configure a virtual pool; assign and remove virtual pool users; and perform basic operations on a virtual machine in the pool. |

GlusterAdmin |

Gluster Storage Administrator. |

Can create, delete, configure, and manage Gluster storage volumes. |

VmImporterExporter |

Import and export Administrator of a virtual machine. |

Can import and export virtual machines. Able to view all virtual machines and templates exported by other users. |

Assigning an Administrator or User Role to a Resource

Assign administrator or user roles to resources to allow users to access or manage that resource.

-

Find and click the resource’s name. This opens the details view.

-

Click the Permissions tab to list the assigned users, each user’s role, and the inherited permissions for the selected resource.

-

Click Add.

-

Enter the name or user name of an existing user into the Search text box and click Go. Select a user from the resulting list of possible matches.

-

Select a role from the Role to Assign drop-down list.

-

Click OK.

The user now has the inherited permissions of that role enabled for that resource.

|

Avoid assigning global permissions to regular users on resources such as clusters because permissions are automatically inherited by resources that are lower in a system’s hierarchy. Set Assigning global permissions can cause two problems due to the inheritance of permissions:

Therefore, it is strongly recommended to set |

Removing an Administrator or User Role from a Resource

Remove an administrator or user role from a resource; the user loses the inherited permissions associated with the role for that resource.

-

Find and click the resource’s name. This opens the details view.

-

Click the Permissions tab to list the assigned users, the user’s role, and the inherited permissions for the selected resource.

-

Select the user to remove from the resource.

-

Click Remove.

-

Click OK.

Managing System Permissions for a Data Center

As the SuperUser, the system administrator manages all aspects of the Administration Portal. More specific administrative roles can be assigned to other users. These restricted administrator roles are useful for granting a user administrative privileges that limit them to a specific resource. For example, a DataCenterAdmin role has administrator privileges only for the assigned data center with the exception of the storage for that data center, and a ClusterAdmin has administrator privileges only for the assigned cluster.

A data center administrator is a system administration role for a specific data center only. This is useful in virtualization environments with multiple data centers where each data center requires an administrator. The DataCenterAdmin role is a hierarchical model; a user assigned the data center administrator role for a data center can manage all objects in the data center with the exception of storage for that data center. Use the Configure button in the header bar to assign a data center administrator for all data centers in the environment.

The data center administrator role permits the following actions:

-

Create and remove clusters associated with the data center.

-

Add and remove hosts, virtual machines, and pools associated with the data center.

-

Edit user permissions for virtual machines associated with the data center.

|

You can only assign roles and permissions to existing users. |

You can change the system administrator of a data center by removing the existing system administrator and adding the new system administrator.

Data Center Administrator Roles Explained

Data Center Permission Roles

The table below describes the administrator roles and privileges applicable to data center administration.

| Role | Privileges | Notes |

|---|---|---|

DataCenterAdmin |

Data Center Administrator |

Can use, create, delete, manage all physical and virtual resources within a specific data center except for storage, including clusters, hosts, templates and virtual machines. |

NetworkAdmin |

Network Administrator |

Can configure and manage the network of a particular data center. A network administrator of a data center inherits network permissions for virtual machines within the data center as well. |

Managing System Permissions for a Cluster

As the SuperUser, the system administrator manages all aspects of the Administration Portal. More specific administrative roles can be assigned to other users. These restricted administrator roles are useful for granting a user administrative privileges that limit them to a specific resource. For example, a DataCenterAdmin role has administrator privileges only for the assigned data center with the exception of the storage for that data center, and a ClusterAdmin has administrator privileges only for the assigned cluster.

A cluster administrator is a system administration role for a specific cluster only. This is useful in data centers with multiple clusters, where each cluster requires a system administrator. The ClusterAdmin role is a hierarchical model: a user assigned the cluster administrator role for a cluster can manage all objects in the cluster. Use the Configure button in the header bar to assign a cluster administrator for all clusters in the environment.

The cluster administrator role permits the following actions:

-

Create and remove associated clusters.

-

Add and remove hosts, virtual machines, and pools associated with the cluster.

-

Edit user permissions for virtual machines associated with the cluster.

|

You can only assign roles and permissions to existing users. |

You can also change the system administrator of a cluster by removing the existing system administrator and adding the new system administrator.

Cluster Administrator Roles Explained

Cluster Permission Roles

The table below describes the administrator roles and privileges applicable to cluster administration.

| Role | Privileges | Notes |

|---|---|---|

ClusterAdmin |

Cluster Administrator |

Can use, create, delete, manage all physical and virtual resources in a specific cluster, including hosts, templates and virtual machines. Can configure network properties within the cluster such as designating display networks, or marking a network as required or non-required. However, a ClusterAdmin does not have permissions to attach or detach networks from a cluster, to do so NetworkAdmin permissions are required. |

NetworkAdmin |

Network Administrator |

Can configure and manage the network of a particular cluster. A network administrator of a cluster inherits network permissions for virtual machines within the cluster as well. |

Managing System Permissions for a Network

As the SuperUser, the system administrator manages all aspects of the Administration Portal. More specific administrative roles can be assigned to other users. These restricted administrator roles are useful for granting a user administrative privileges that limit them to a specific resource. For example, a DataCenterAdmin role has administrator privileges only for the assigned data center with the exception of the storage for that data center, and a ClusterAdmin has administrator privileges only for the assigned cluster.

A network administrator is a system administration role that can be applied for a specific network, or for all networks on a data center, cluster, host, virtual machine, or template. A network user can perform limited administration roles, such as viewing and attaching networks on a specific virtual machine or template. You can use the Configure button in the header bar to assign a network administrator for all networks in the environment.

The network administrator role permits the following actions:

-

Create, edit and remove networks.

-

Edit the configuration of the network, including configuring port mirroring.

-

Attach and detach networks from resources including clusters and virtual machines.

The user who creates a network is automatically assigned NetworkAdmin permissions on the created network. You can also change the administrator of a network by removing the existing administrator and adding the new administrator.

Network Administrator and User Roles Explained

Network Permission Roles

The table below describes the administrator and user roles and privileges applicable to network administration.

| Role | Privileges | Notes |

|---|---|---|

NetworkAdmin |

Network Administrator for data center, cluster, host, virtual machine, or template. The user who creates a network is automatically assigned NetworkAdmin permissions on the created network. |

Can configure and manage the network of a particular data center, cluster, host, virtual machine, or template. A network administrator of a data center or cluster inherits network permissions for virtual pools within the cluster. To configure port mirroring on a virtual machine network, apply the NetworkAdmin role on the network and the UserVmManager role on the virtual machine. |

VnicProfileUser |

Logical network and network interface user for virtual machine and template. |

Can attach or detach network interfaces from specific logical networks. |

Managing System Permissions for a Host

As the SuperUser, the system administrator manages all aspects of the Administration Portal. More specific administrative roles can be assigned to other users. These restricted administrator roles are useful for granting a user administrative privileges that limit them to a specific resource. For example, a DataCenterAdmin role has administrator privileges only for the assigned data center with the exception of the storage for that data center, and a ClusterAdmin has administrator privileges only for the assigned cluster.

A host administrator is a system administration role for a specific host only. This is useful in clusters with multiple hosts, where each host requires a system administrator. You can use the Configure button in the header bar to assign a host administrator for all hosts in the environment.

The host administrator role permits the following actions:

-

Edit the configuration of the host.

-

Set up the logical networks.

-

Remove the host.

You can also change the system administrator of a host by removing the existing system administrator and adding the new system administrator.

Host Administrator Roles Explained

Host Permission Roles

The table below describes the administrator roles and privileges applicable to host administration.

| Role | Privileges | Notes |

|---|---|---|

HostAdmin |

Host Administrator |

Can configure, manage, and remove a specific host. Can also perform network-related operations on a specific host. |

Managing System Permissions for a Storage Domain

As the SuperUser, the system administrator manages all aspects of the Administration Portal. More specific administrative roles can be assigned to other users. These restricted administrator roles are useful for granting a user administrative privileges that limit them to a specific resource. For example, a DataCenterAdmin role has administrator privileges only for the assigned data center with the exception of the storage for that data center, and a ClusterAdmin has administrator privileges only for the assigned cluster.

A storage administrator is a system administration role for a specific storage domain only. This is useful in data centers with multiple storage domains, where each storage domain requires a system administrator. Use the Configure button in the header bar to assign a storage administrator for all storage domains in the environment.

The storage domain administrator role permits the following actions:

-

Edit the configuration of the storage domain.

-

Move the storage domain into maintenance mode.

-

Remove the storage domain.

|

You can only assign roles and permissions to existing users. |

You can also change the system administrator of a storage domain by removing the existing system administrator and adding the new system administrator.

Storage Administrator Roles Explained

Storage Domain Permission Roles

The table below describes the administrator roles and privileges applicable to storage domain administration.

| Role | Privileges | Notes |

|---|---|---|

StorageAdmin |

Storage Administrator |

Can create, delete, configure and manage a specific storage domain. |

GlusterAdmin |

Gluster Storage Administrator |

Can create, delete, configure and manage Gluster storage volumes. |

Managing System Permissions for a Virtual Machine Pool

As the SuperUser, the system administrator manages all aspects of the Administration Portal. More specific administrative roles can be assigned to other users. These restricted administrator roles are useful for granting a user administrative privileges that limit them to a specific resource. For example, a DataCenterAdmin role has administrator privileges only for the assigned data center with the exception of the storage for that data center, and a ClusterAdmin has administrator privileges only for the assigned cluster.

A virtual machine pool administrator is a system administration role for virtual machine pools in a data center. This role can be applied to specific virtual machine pools, to a data center, or to the whole virtualized environment; this is useful to allow different users to manage certain virtual machine pool resources.

The virtual machine pool administrator role permits the following actions:

-

Create, edit, and remove pools.

-

Add and detach virtual machines from the pool.

|

You can only assign roles and permissions to existing users. |

Virtual Machine Pool Administrator Roles Explained

Pool Permission Roles

The table below describes the administrator roles and privileges applicable to pool administration.

| Role | Privileges | Notes |

|---|---|---|

VmPoolAdmin |

System Administrator role of a virtual pool. |

Can create, delete, and configure a virtual pool, assign and remove virtual pool users, and perform basic operations on a virtual machine. |

ClusterAdmin |

Cluster Administrator |

Can use, create, delete, manage all virtual machine pools in a specific cluster. |

Managing System Permissions for a Virtual Disk

As the SuperUser, the system administrator manages all aspects of the Administration Portal. More specific administrative roles can be assigned to other users. These restricted administrator roles are useful for granting a user administrative privileges that limit them to a specific resource. For example, a DataCenterAdmin role has administrator privileges only for the assigned data center with the exception of the storage for that data center, and a ClusterAdmin has administrator privileges only for the assigned cluster.

oVirt Engine provides two default virtual disk user roles, but no default virtual disk administrator roles. One of these user roles, the DiskCreator role, enables the administration of virtual disks from the VM Portal. This role can be applied to specific virtual machines, to a data center, to a specific storage domain, or to the whole virtualized environment; this is useful to allow different users to manage different virtual resources.

The virtual disk creator role permits the following actions:

-

Create, edit, and remove virtual disks associated with a virtual machine or other resources.

-

Edit user permissions for virtual disks.

|

You can only assign roles and permissions to existing users. |

Virtual Disk User Roles Explained

Virtual Disk User Permission Roles

The table below describes the user roles and privileges applicable to using and administrating virtual disks in the VM Portal.

| Role | Privileges | Notes |

|---|---|---|

DiskOperator |

Virtual disk user. |

Can use, view and edit virtual disks. Inherits permissions to use the virtual machine to which the virtual disk is attached. |

DiskCreator |

Can create, edit, manage and remove virtual disks within assigned clusters or data centers. |

This role is not applied to a specific virtual disk; apply this role to a user for the whole environment with the Configure window. Alternatively apply this role for specific data centers, clusters, or storage domains. |

Setting a Legacy SPICE Cipher

SPICE consoles use FIPS-compliant encryption by default, with a cipher string. The default SPICE cipher string is:

kECDHE+FIPS:kDHE+FIPS:kRSA+FIPS:!eNULL:!aNULL

This string is generally sufficient. However, if you have a virtual machine with an older operating system or SPICE client, where either one or the other does not support FIPS-compliant encryption, you must use a weaker cipher string. Otherwise, a connection security error may occur if you install a new cluster or a new host in an existing cluster and try to connect to that virtual machine.

You can change the cipher string by using an Ansible playbook.

Changing the cipher string

-

On the Engine machine, create a file in the directory

/usr/share/ovirt-engine/playbooks. For example:# vim /usr/share/ovirt-engine/playbooks/change-spice-cipher.yml -

Enter the following in the file and save it:

name: oVirt - setup weaker SPICE encryption for old clients hosts: hostname vars: host_deploy_spice_cipher_string: 'DEFAULT:-RC4:-3DES:-DES' roles: - ovirt-host-deploy-spice-encryption -

Run the file you just created:

# ansible-playbook -l hostname /usr/share/ovirt-engine/playbooks/change-spice-cipher.yml

Alternatively, you can reconfigure the host with the Ansible playbook ovirt-host-deploy using the --extra-vars option with the variable host_deploy_spice_cipher_string:

# ansible-playbook -l hostname \

--extra-vars host_deploy_spice_cipher_string=”DEFAULT:-RC4:-3DES:-DES” \

/usr/share/ovirt-engine/playbooks/ovirt-host-deploy.yml1.1.3. Scheduling Policies

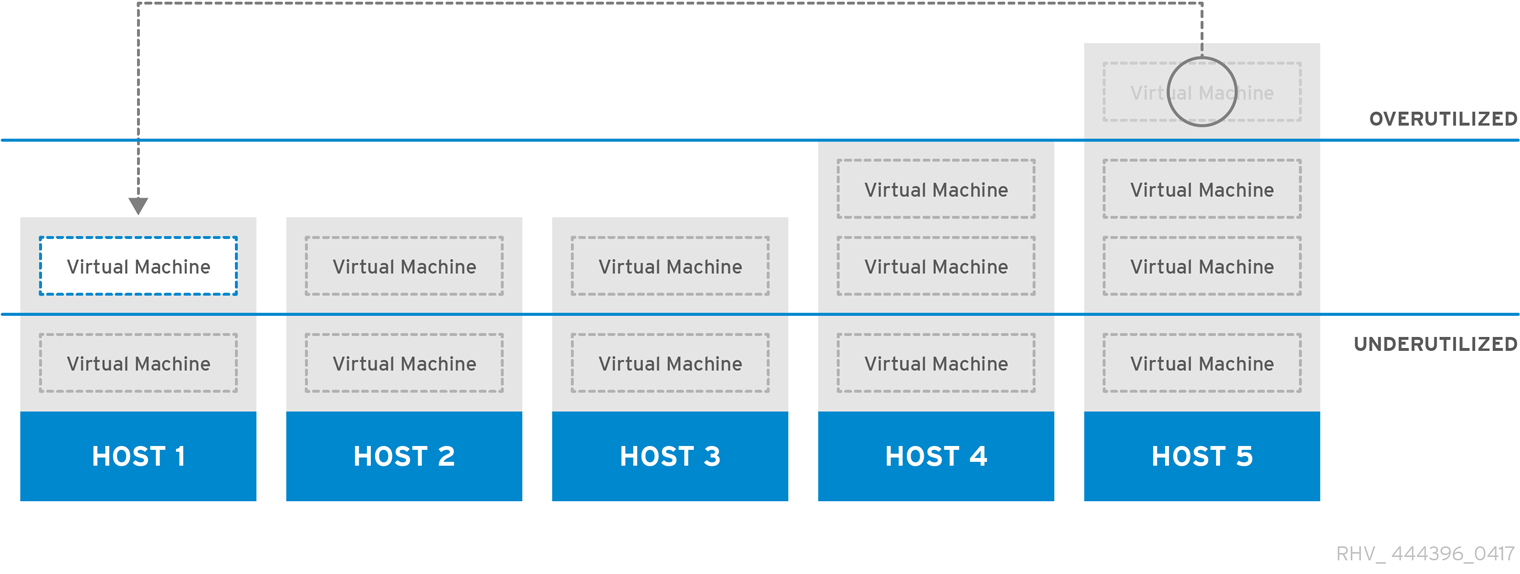

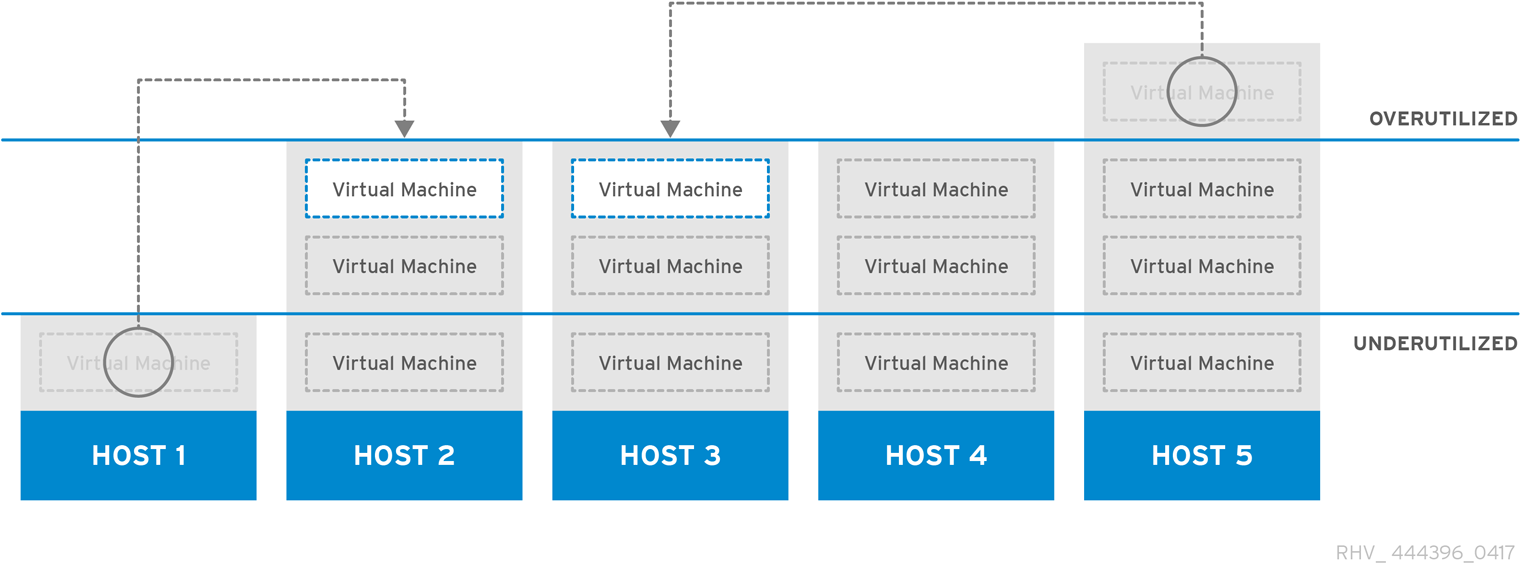

A scheduling policy is a set of rules that defines the logic by which virtual machines are distributed amongst hosts in the cluster that scheduling policy is applied to. Scheduling policies determine this logic via a combination of filters, weightings, and a load balancing policy. The filter modules apply hard enforcement and filter out hosts that do not meet the conditions specified by that filter. The weights modules apply soft enforcement, and are used to control the relative priority of factors considered when determining the hosts in a cluster on which a virtual machine can run.

The oVirt Engine provides five default scheduling policies: Evenly_Distributed, Cluster_Maintenance, None, Power_Saving, and VM_Evenly_Distributed. You can also define new scheduling policies that provide fine-grained control over the distribution of virtual machines. Regardless of the scheduling policy, a virtual machine will not start on a host with an overloaded CPU. By default, a host’s CPU is considered overloaded if it has a load of more than 80% for 2 minutes, but these values can be changed using scheduling policies. See Scheduling Policies in the Administration Guide for more information about the properties of each scheduling policy.

The Evenly_Distributed scheduling policy distributes the memory and CPU processing load evenly across all hosts in the cluster. Additional virtual machines attached to a host will not start if that host has reached the defined CpuOverCommitDurationMinutes, HighUtilization, VCpuToPhysicalCpuRatio, or MaxFreeMemoryForOverUtilized.

The VM_Evenly_Distributed scheduling policy distributes virtual machines evenly between hosts based on a count of the virtual machines. The cluster is considered unbalanced if any host is running more virtual machines than the HighVmCount and there is at least one host with a virtual machine count that falls outside of the MigrationThreshold.

The Power_Saving scheduling policy distributes the memory and CPU processing load across a subset of available hosts to reduce power consumption on underutilized hosts. Hosts with a CPU load below the low utilization value for longer than the defined time interval will migrate all virtual machines to other hosts so that it can be powered down. Additional virtual machines attached to a host will not start if that host has reached the defined high utilization value.

Set the None policy to have no load or power sharing between hosts for running virtual machines. This is the default mode. When a virtual machine is started, the memory and CPU processing load is spread evenly across all hosts in the cluster. Additional virtual machines attached to a host will not start if that host has reached the defined CpuOverCommitDurationMinutes, HighUtilization, or MaxFreeMemoryForOverUtilized.

The Cluster_Maintenance scheduling policy limits activity in a cluster during maintenance tasks. When the Cluster_Maintenance policy is set, no new virtual machines may be started, except highly available virtual machines. If host failure occurs, highly available virtual machines will restart properly and any virtual machine can migrate.

Creating a Scheduling Policy

You can create new scheduling policies to control the logic by which virtual machines are distributed amongst a given cluster in your oVirt environment.

-

Click .

-

Click the Scheduling Policies tab.

-

Click New.

-

Enter a Name and Description for the scheduling policy.

-

Configure filter modules:

-

In the Filter Modules section, drag and drop the preferred filter modules to apply to the scheduling policy from the Disabled Filters section into the Enabled Filters section.

-

Specific filter modules can also be set as the First, to be given highest priority, or Last, to be given lowest priority, for basic optimization. To set the priority, right-click any filter module, hover the cursor over Position and select First or Last.

-

-

Configure weight modules:

-

In the Weights Modules section, drag and drop the preferred weights modules to apply to the scheduling policy from the Disabled Weights section into the Enabled Weights & Factors section.

-

Use the + and - buttons to the left of the enabled weight modules to increase or decrease the weight of those modules.

-

-

Specify a load balancing policy:

-

From the drop-down menu in the Load Balancer section, select the load balancing policy to apply to the scheduling policy.

-

From the drop-down menu in the Properties section, select a load balancing property to apply to the scheduling policy and use the text field to the right of that property to specify a value.

-

Use the + and - buttons to add or remove additional properties.

-

-

Click OK.

Explanation of Settings in the New Scheduling Policy and Edit Scheduling Policy Window

The following details the different options available in the New Scheduling Policy and Edit Scheduling Policy windows.

Name: The name of the scheduling policy. This is the name used to refer to the scheduling policy in the oVirt Engine.

Description: A description of the scheduling policy. This field is recommended but not mandatory.

Filter Modules: A set of filters for controlling the hosts on which a virtual machine in a cluster can run. Enabling a filter will filter out hosts that do not meet the conditions specified by that filter, as outlined below:

| Filter Modules marked with represent units that are mandatory since 4.4.0. From that version onward these are always enabled and will not be displayed in the UI. |

| Filter Name | Description |

|---|---|

|

Virtual machines being started on the host that are not configured for high availability filter out the host |

|

Hosts which do not satisfy the CPU pinning definition. |

|

Prevents migration to the same host. |

|

Hosts with CPU usage that is above the defined HighUtilization threshold for the interval defined by the CpuOverCommitDurationMinutes. |

|

Hosts other than the host to which the virtual machine is pinned. |

|

Hosts that do not meet the CPU topology of the virtual machine. |

|

Hosts that do not meet the affinity rules defined for the virtual machine. |

|

Hosts that do not have NUMA nodes that can accommodate the virtual machine vNUMA nodes in terms of resources. |

|

Hosts that are running an earlier version of the operating system than the host that the virtual machine currently runs on. |

|

Hosts that do not provide the required mediated device (mDev). |

|

Hosts that do not have sufficient memory to run the virtual machine. |

|

Hosts with fewer CPUs than the number assigned to the virtual machine. |

|

Reserves space for the Engine virtual machine on a specified number of self-hosted engine nodes. |

|

Hosts that are not swapping within the threshold. |

|

Hosts that do not support virtual machines configured with storage leases. |

|

Group of hosts that do not meet the conditions specified for a virtual machine that is a member of an affinity group. For example, that virtual machines in an affinity group must run on one of the hosts in a group or on a separate host that is excluded from the group. |

|

Hosts that do not support host devices required by the virtual machine. |

|

Forces the Engine virtual machine in a self-hosted engine environment to run only on hosts with a positive high availability score. |

|

Hosts which do not have proper emulated machine support. |

|

Hosts that do not meet the required number of Huge Pages needed for the virtual machine’s memory. |

|

Hosts that do not have virtual machines with the same TSC frequency as the host currently running the virtual machine. |

|

Hosts on which networks required by the network interface controller of a virtual machine are not installed, or on which the cluster’s display network is not installed. |

|

Hosts that do not have the correct cluster compatibility version support. |

|

Runs VMs only on hosts with a hooks required by VM’s configuration |

Weight Modules: A set of weightings for controlling the relative priority of factors considered when determining the hosts in a cluster on which a virtual machine can run.

| Weight Name | Description |

|---|---|

|

Weights hosts in accordance with the affinity groups defined for virtual machines. This weight module determines how likely virtual machines in an affinity group are to run on the same host or on separate hosts in accordance with the parameters of that affinity group. |

|

Weight hosts in accordance with their operating system version. The weight penalizes hosts with earlier operating systems more than hosts with the same operating system as the host that the virtual machine is currently running on. This ensures that priority is always given to hosts with later operating systems. |

|

Weights hosts in accordance with their CPU usage, giving priority to hosts with lower CPU usage. |

|

Prefers hosts that have more or an equal number of sockets, cores and threads than the VM. |

|

Weights hosts in accordance with their high availability score. |

|

Weights hosts in accordance with their CPU usage, giving priority to hosts with higher CPU usage. |

|

Weights hosts in accordance to pinning compatibility. When a virtual machine has both vNUMA and pinning defined, this weight module gives preference to hosts whose CPU pinning does not clash with the vNUMA pinning. |

|

Weights hosts in accordance with the affinity groups defined for virtual machines. This weight module determines how likely virtual machines in an affinity group are to run on one of the hosts in a group or on a separate host that is excluded from the group. |

|

Weights hosts in accordance with the number of virtual machines running on those hosts. |

|

Weights hosts in accordance with their high availability score. |

|

Weights hosts in accordance with their memory usage, giving priority to hosts with higher available memory. |

|

Weights hosts in accordance to whether a virtual machine fits into a single NUMA node. When a virtual machine does not have vNUMA defined, this weight module gives preference to hosts that can fit the virtual machine into a single physical NUMA. |

|

Preferred hosts have priority during virtual machine setup. |

|

Weights hosts in accordance with their memory usage, giving priority to hosts with higher memory usage. |

Load Balancer: This drop-down menu allows you to select a load balancing module to apply. Load balancing modules determine the logic used to migrate virtual machines from hosts experiencing high usage to hosts experiencing lower usage.

Properties: This drop-down menu allows you to add or remove properties for load balancing modules, and is only available when you have selected a load balancing module for the scheduling policy. No properties are defined by default, and the properties that are available are specific to the load balancing module that is selected. Use the + and - buttons to add or remove additional properties to or from the load balancing module.

1.1.4. Instance Types

Instance types can be used to define the hardware configuration of a virtual machine. Selecting an instance type when creating or editing a virtual machine will automatically fill in the hardware configuration fields. This allows users to create multiple virtual machines with the same hardware configuration without having to manually fill in every field.

| Support for instance types is now deprecated, and will be removed in a future release. |

A set of predefined instance types are available by default, as outlined in the following table:

| Name | Memory | vCPUs |

|---|---|---|

Tiny |

512 MB |

1 |

Small |

2 GB |

1 |

Medium |

4 GB |

2 |

Large |

8 GB |

2 |

XLarge |

16 GB |

4 |

Administrators can also create, edit, and remove instance types from the Instance Types tab of the Configure window.

Fields in the New Virtual Machine and Edit Virtual Machine windows that are bound to an instance type have a chain link image next to them ( ). If the value of one of these fields is changed, the virtual machine will be detached from the instance type, changing to Custom, and the chain will appear broken (

). If the value of one of these fields is changed, the virtual machine will be detached from the instance type, changing to Custom, and the chain will appear broken ( ). However, if the value is changed back, the chain will relink and the instance type will move back to the selected one.

). However, if the value is changed back, the chain will relink and the instance type will move back to the selected one.

Creating Instance Types

Administrators can create new instance types, which can then be selected by users when creating or editing virtual machines.

-

Click .

-

Click the Instance Types tab.

-

Click New.

-

Enter a Name and Description for the instance type.

-

Click Show Advanced Options and configure the instance type’s settings as required. The settings that appear in the New Instance Type window are identical to those in the New Virtual Machine window, but with the relevant fields only. See Explanation of Settings in the New Virtual Machine and Edit Virtual Machine Windows in the Virtual Machine Management Guide.

-

Click OK.

The new instance type will appear in the Instance Types tab in the Configure window, and can be selected from the Instance Type drop-down list when creating or editing a virtual machine.

Editing Instance Types

Administrators can edit existing instance types from the Configure window.

-

Click .

-

Click the Instance Types tab.

-

Select the instance type to be edited.

-

Click Edit.

-

Change the settings as required.

-

Click OK.

The configuration of the instance type is updated. When a new virtual machine based on this instance type is created, or when an existing virtual machine based on this instance type is updated, the new configuration is applied.

Existing virtual machines based on this instance type will display fields, marked with a chain icon, that will be updated. If the existing virtual machines were running when the instance type was changed, the orange Pending Changes icon will appear beside them and the fields with the chain icon will be updated at the next restart.

Removing Instance Types

-

Click .

-

Click the Instance Types tab.

-

Select the instance type to be removed.

-

Click Remove.

-

If any virtual machines are based on the instance type to be removed, a warning window listing the attached virtual machines will appear. To continue removing the instance type, select the Approve Operation check box. Otherwise click Cancel.

-

Click OK.

The instance type is removed from the Instance Types list and can no longer be used when creating a new virtual machine. Any virtual machines that were attached to the removed instance type will now be attached to Custom (no instance type).

1.1.5. MAC Address Pools

MAC address pools define the range(s) of MAC addresses allocated for each cluster. A MAC address pool is specified for each cluster. By using MAC address pools, oVirt can automatically generate and assign MAC addresses to new virtual network devices, which helps to prevent MAC address duplication. MAC address pools are more memory efficient when all MAC addresses related to a cluster are within the range for the assigned MAC address pool.

The same MAC address pool can be shared by multiple clusters, but each cluster has a single MAC address pool assigned. A default MAC address pool is created by oVirt and is used if another MAC address pool is not assigned. For more information about assigning MAC address pools to clusters see Creating a New Cluster.

|

If more than one oVirt cluster shares a network, do not rely solely on the default MAC address pool because the virtual machines of each cluster will try to use the same range of MAC addresses, leading to conflicts. To avoid MAC address conflicts, check the MAC address pool ranges to ensure that each cluster is assigned a unique MAC address range. |

The MAC address pool assigns the next available MAC address following the last address that was returned to the pool. If there are no further addresses left in the range, the search starts again from the beginning of the range. If there are multiple MAC address ranges with available MAC addresses defined in a single MAC address pool, the ranges take turns in serving incoming requests in the same way available MAC addresses are selected.

Creating MAC Address Pools

You can create new MAC address pools.

-

Click .

-

Click the MAC Address Pools tab.

-

Click Add.

-

Enter the Name and Description of the new MAC address pool.

-

Select the Allow Duplicates check box to allow a MAC address to be used multiple times in a pool. The MAC address pool will not automatically use a duplicate MAC address, but enabling the duplicates option means a user can manually use a duplicate MAC address.

If one MAC address pool has duplicates disabled, and another has duplicates enabled, each MAC address can be used once in the pool with duplicates disabled but can be used multiple times in the pool with duplicates enabled.

-

Enter the required MAC Address Ranges. To enter multiple ranges click the plus button next to the From and To fields.

-

Click OK.

Editing MAC Address Pools

You can edit MAC address pools to change the details, including the range of MAC addresses available in the pool and whether duplicates are allowed.

-

Click .

-

Click the MAC Address Pools tab.

-

Select the MAC address pool to be edited.

-

Click Edit.

-

Change the Name, Description, Allow Duplicates, and MAC Address Ranges fields as required.

When a MAC address range is updated, the MAC addresses of existing NICs are not reassigned. MAC addresses that were already assigned, but are outside of the new MAC address range, are added as user-specified MAC addresses and are still tracked by that MAC address pool.

-

Click OK.

Editing MAC Address Pool Permissions

After a MAC address pool has been created, you can edit its user permissions. The user permissions control which data centers can use the MAC address pool. See Roles for more information on adding new user permissions.

-

Click .

-

Click the MAC Address Pools tab.

-

Select the required MAC address pool.

-

Edit the user permissions for the MAC address pool:

-

To add user permissions to a MAC address pool:

-

Click Add in the user permissions pane at the bottom of the Configure window.

-

Search for and select the required users.

-

Select the required role from the Role to Assign drop-down list.

-

Click OK to add the user permissions.

-

-

To remove user permissions from a MAC address pool:

-

Select the user permission to be removed in the user permissions pane at the bottom of the Configure window.

-

Click Remove to remove the user permissions.

-

-

Removing MAC Address Pools

You can remove a created MAC address pool if the pool is not associated with a cluster, but the default MAC address pool cannot be removed.

-

Click .

-

Click the MAC Address Pools tab.

-

Select the MAC address pool to be removed.

-

Click the Remove.

-

Click OK.

1.2. Dashboard

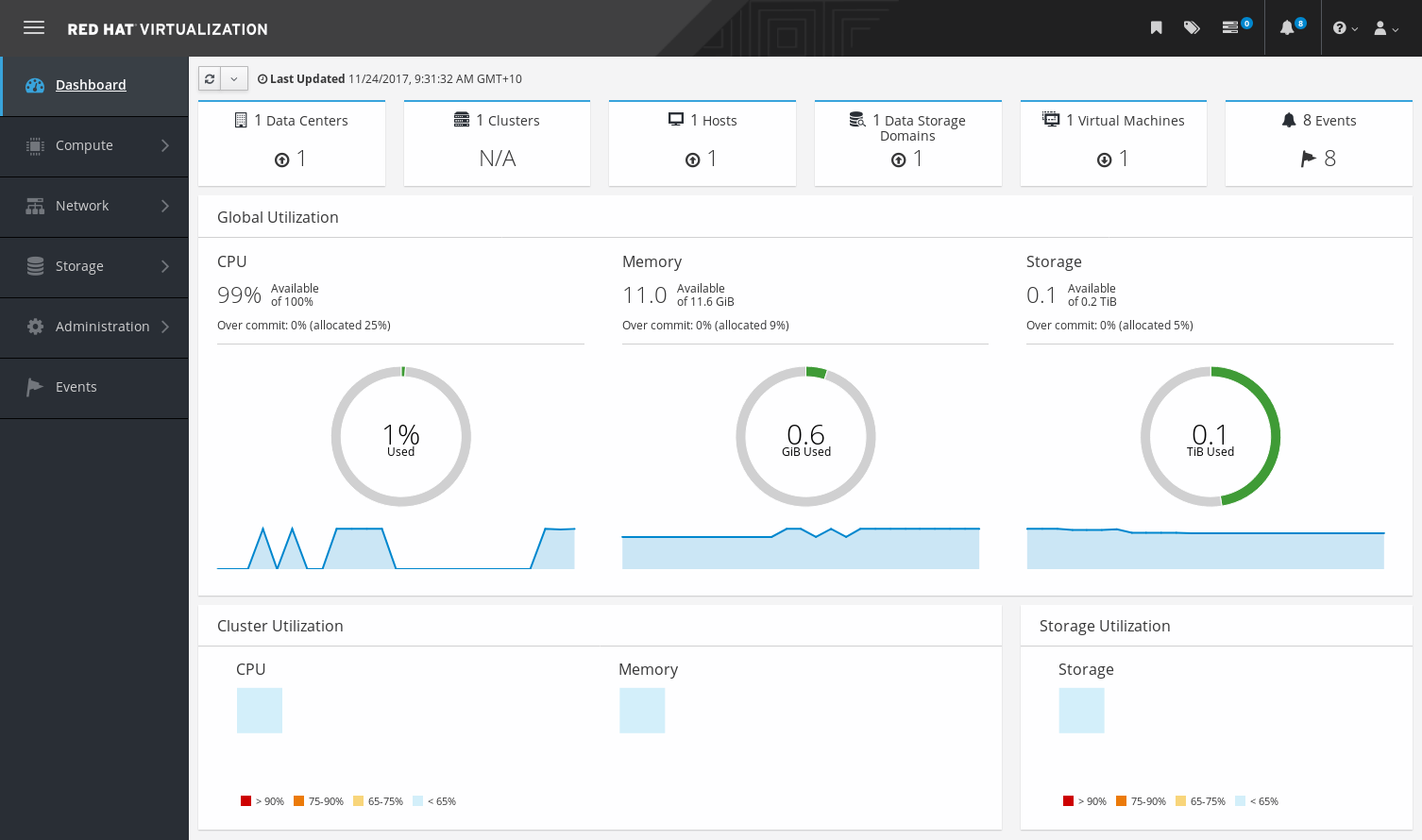

The Dashboard provides an overview of the oVirt system status by displaying a summary of oVirt’s resources and utilization. This summary can alert you to a problem and allows you to analyze the problem area.

The information in the dashboard is updated every 15 minutes by default from Data Warehouse, and every 15 seconds by default by the Engine API, or whenever the Dashboard is refreshed. The Dashboard is refreshed when the user changes back from another page or when manually refreshed. The Dashboard does not automatically refresh. The inventory card information is supplied by the Engine API and the utilization information is supplied by Data Warehouse. The Dashboard is implemented as a UI plugin component, which is automatically installed and upgraded alongside the Engine.

1.2.1. Prerequisites

The Dashboard requires that Data Warehouse is installed and configured. See Installing and Configuring Data Warehouse in the Data Warehouse Guide.

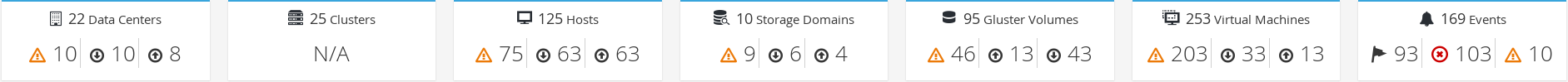

1.2.2. Global Inventory

The top section of the Dashboard provides a global inventory of the oVirt resources and includes items for data centers, clusters, hosts, storage domains, virtual machines, and events. Icons show the status of each resource and numbers show the quantity of the each resource with that status.

The title shows the number of a type of resource and their status is displayed below the title. Clicking on the resource title navigates to the related page in the oVirt Engine. The status for Clusters is always displayed as N/A.

| Icon | Status |

|---|---|

|

None of that resource added to oVirt. |

|

Shows the number of a resource with a warning status. Clicking on the icon navigates to the appropriate page with the search limited to that resource with a warning status. The search is limited differently for each resource:

|

|

Shows the number of a resource with an up status. Clicking on the icon navigates to the appropriate page with the search limited to resources that are up. |

|

Shows the number of a resource with a down status. Clicking on the icon navigates to the appropriate page with the search limited to resources with a down status. The search is limited differently for each resource:

|

images:images/Dashboard_Alert.png[title="Alert icon"] |

Shows the number of events with an alert status. Clicking on the icon navigates to Events with the search limited to events with the severity of alert. |

images:images/Dashboard_Error.png[title="Error icon"] |

Shows the number of events with an error status. Clicking on the icon navigates to Events with the search limited to events with the severity of error. |

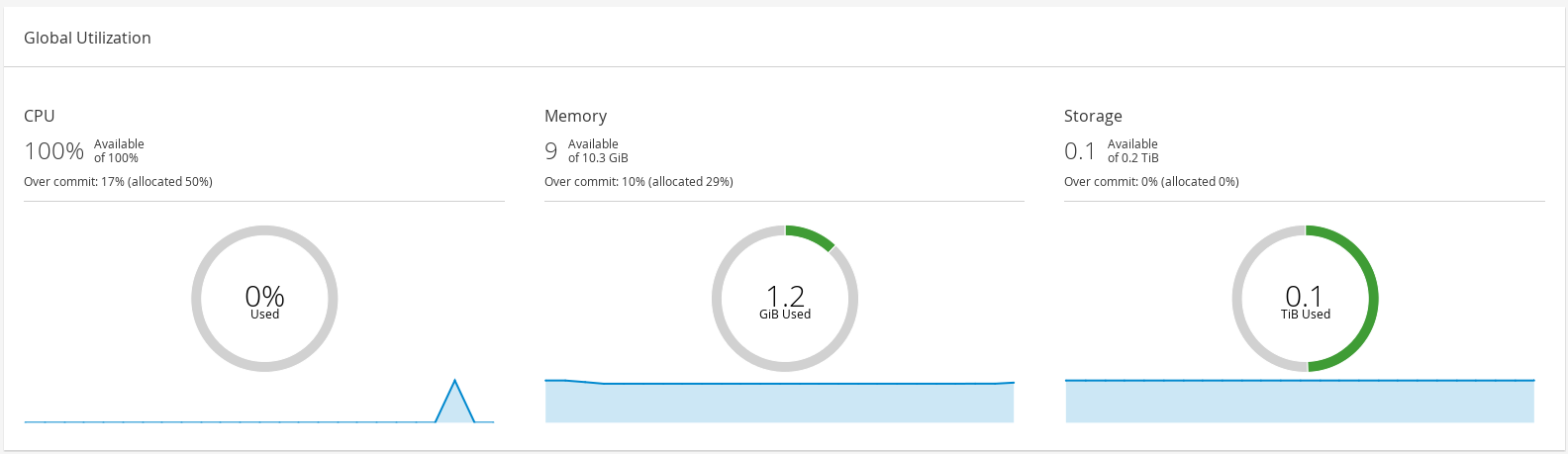

1.2.3. Global Utilization

The Global Utilization section shows the system utilization of the CPU, Memory and Storage.

-

The top section shows the percentage of the available CPU, memory or storage and the over commit ratio. For example, the over commit ratio for the CPU is calculated by dividing the number of virtual cores by the number of physical cores that are available for the running virtual machines based on the latest data in Data Warehouse.

-

The donut displays the usage in percentage for the CPU, memory or storage and shows the average usage for all hosts based on the average usage in the last 5 minutes. Hovering over a section of the donut will display the value of the selected section.

-

The line graph at the bottom displays the trend in the last 24 hours. Each data point shows the average usage for a specific hour. Hovering over a point on the graph displays the time and the percentage used for the CPU graph and the amount of usage for the memory and storage graphs.

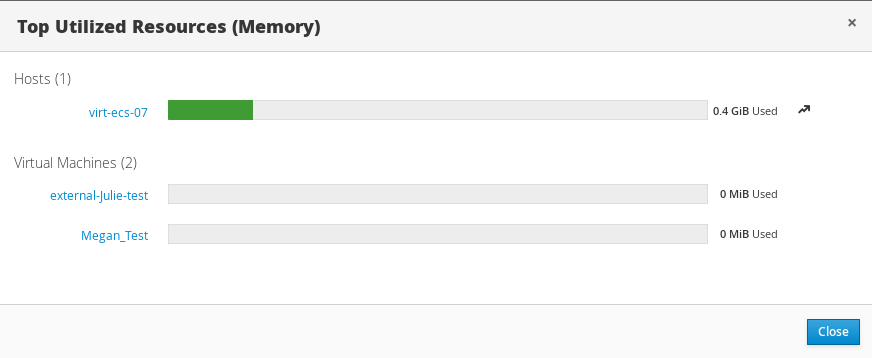

Top Utilized Resources

Clicking the donut in the global utilization section of the Dashboard will display a list of the top utilized resources for the CPU, memory or storage. For CPU and memory the pop-up shows a list of the ten hosts and virtual machines with the highest usage. For storage the pop-up shows a list of the top ten utilized storage domains and virtual machines. The arrow to the right of the usage bar shows the trend of usage for that resource in the last minute.

1.2.4. Cluster Utilization

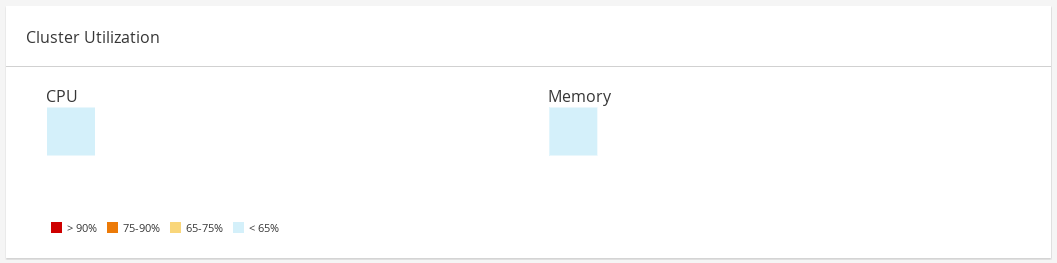

The Cluster Utilization section shows the cluster utilization for the CPU and memory in a heatmap.

CPU

The heatmap of the CPU utilization for a specific cluster that shows the average utilization of the CPU for the last 24 hours. Hovering over the heatmap displays the cluster name. Clicking on the heatmap navigates to and displays the results of a search on a specific cluster sorted by CPU utilization. The formula used to calculate the usage of the CPU by the cluster is the average host CPU utilization in the cluster. This is calculated by using the average host CPU utilization for each host over the last 24 hours to find the total average usage of the CPU by the cluster.

Memory

The heatmap of the memory utilization for a specific cluster that shows the average utilization of the memory for the last 24 hours. Hovering over the heatmap displays the cluster name. Clicking on the heatmap navigates to and displays the results of a search on a specific cluster sorted by memory usage. The formula used to calculate the memory usage by the cluster is the total utilization of the memory in the cluster in GB. This is calculated by using the average host memory utilization for each host over the last 24 hours to find the total average usage of memory by the cluster.

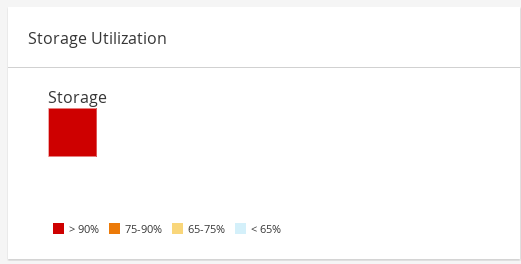

1.2.5. Storage Utilization

The Storage Utilization section shows the storage utilization in a heatmap.

The heatmap shows the average utilization of the storage for the last 24 hours. The formula used to calculate the storage usage by the cluster is the total utilization of the storage in the cluster. This is calculated by using the average storage utilization for each host over the last 24 hours to find the total average usage of the storage by the cluster. Hovering over the heatmap displays the storage domain name. Clicking on the heatmap navigates to with the storage domains sorted by utilization.

1.3. Searches

1.3.1. Performing Searches in oVirt

The Administration Portal allows you to manage thousands of resources, such as virtual machines, hosts, users, and more. To perform a search, enter the search query (free-text or syntax-based) into the search bar, available on the main page for each resource. Search queries can be saved as bookmarks for future reuse, so you do not have to reenter a search query each time the specific search results are required. Searches are not case sensitive.

1.3.2. Search Syntax and Examples

The syntax of the search queries for oVirt resources is as follows:

result type: {criteria} [sortby sort_spec]

Syntax Examples

The following examples describe how the search query is used and help you to understand how oVirt assists with building search queries.

| Example | Result |

|---|---|

Hosts: Vms.status = up page 2 |

Displays page 2 of a list of all hosts running virtual machines that are up. |

Vms: domain = qa.company.com |

Displays a list of all virtual machines running on the specified domain. |

Vms: users.name = Mary |

Displays a list of all virtual machines belonging to users with the user name Mary. |

Events: severity > normal sortby time |

Displays the list of all Events whose severity is higher than Normal, sorted by time. |

1.3.3. Search Auto-Completion

The Administration Portal provides auto-completion to help you create valid and powerful search queries. As you type each part of a search query, a drop-down list of choices for the next part of the search opens below the Search Bar. You can either select from the list and then continue typing/selecting the next part of the search, or ignore the options and continue entering your query manually.

The following table specifies by example how the Administration Portal auto-completion assists in constructing a query:

Hosts: Vms.status = down

| Input | List Items Displayed | Action |

|---|---|---|

h |

|

Select |

Hosts: |

All host properties |

Type v |

Hosts: v |

host properties starting with a |

Select |

Hosts: Vms |

All virtual machine properties |

Type s |

Hosts: Vms.s |

All virtual machine properties beginning with |

Select |

Hosts: Vms.status |

|

Select or type = |

Hosts: Vms.status = |

All status values |

Select or type down |

1.3.4. Search Result Type Options

The result type allows you to search for resources of any of the following types:

-

Vms for a list of virtual machines

-

Host for a list of hosts

-

Pools for a list of pools

-

Template for a list of templates

-

Events for a list of events

-

Users for a list of users

-

Cluster for a list of clusters

-

DataCenter for a list of data centers

-

Storage for a list of storage domains

As each type of resource has a unique set of properties and a set of other resource types that it is associated with, each search type has a set of valid syntax combinations. You can also use the auto-complete feature to create valid queries easily.

1.3.5. Search Criteria

You can specify the search criteria after the colon in the query. The syntax of {criteria} is as follows:

<prop><operator><value>

or

<obj-type><prop><operator><value>

Examples

The following table describes the parts of the syntax:

| Part | Description | Values | Example | Note |

|---|---|---|---|---|

prop |

The property of the searched-for resource. Can also be the property of a resource type (see |

Limit your search to objects with a certain property. For example, search for objects with a status property. |

Status |

N/A |

obj-type |

A resource type that can be associated with the searched-for resource. |

These are system objects, like data centers and virtual machines. |

Users |

N/A |

operator |

Comparison operators. |

= != (not equal) > < >= <= |

N/A |

Value options depend on property. |

Value |

What the expression is being compared to. |

String Integer Ranking Date (formatted according to Regional Settings) |

Jones 256 normal |

|

1.3.6. Search: Multiple Criteria and Wildcards

Wildcards can be used in the <value> part of the syntax for strings. For example, to find all users beginning with m, enter m*.

You can perform a search having two criteria by using the Boolean operators AND and OR. For example:

Vms: users.name = m* AND status = Up

This query returns all running virtual machines for users whose names begin with "m".

Vms: users.name = m* AND tag = "paris-loc"

This query returns all virtual machines tagged with "paris-loc" for users whose names begin with "m".

When two criteria are specified without AND or OR, AND is implied. AND precedes OR, and OR precedes implied AND.

1.3.7. Search: Determining Search Order

You can determine the sort order of the returned information by using sortby. Sort direction (asc for ascending, desc for descending) can be included.

For example:

events: severity > normal sortby time desc

This query returns all Events whose severity is higher than Normal, sorted by time (descending order).

1.3.8. Searching for Data Centers

The following table describes all search options for Data Centers.

| Property (of resource or resource-type) | Type | Description (Reference) |

|---|---|---|

|

Depends on property type |

The property of the clusters associated with the data center. |

|

String |

The name of the data center. |

|

String |

A description of the data center. |

|

String |

The type of data center. |

|

List |

The availability of the data center. |

|

List |

Sorts the returned results by one of the resource properties. |

|

Integer |

The page number of results to display. |

Example

Datacenter: type = nfs and status != up

This example returns a list of data centers with a storage type of NFS and status other than up.

1.3.9. Searching for Clusters

The following table describes all search options for clusters.

| Property (of resource or resource-type) | Type | Description (Reference) |

|---|---|---|

|

Depends on property type |

The property of the data center associated with the cluster. |

|

String |

The data center to which the cluster belongs. |

|

String |

The unique name that identifies the clusters on the network. |

|

String |

The description of the cluster. |

|

String |

True or False indicating the status of the cluster. |

|

List |

Sorts the returned results by one of the resource properties. |

|

Integer |

The page number of results to display. |

Example

Clusters: initialized = true or name = Default

This example returns a list of clusters which are initialized or named Default.

1.3.10. Searching for Hosts

The following table describes all search options for hosts.

| Property (of resource or resource-type) | Type | Description (Reference) |

|---|---|---|

|

Depends on property type |

The property of the virtual machines associated with the host. |

|

Depends on property type |

The property of the templates associated with the host. |

|

Depends on property type |

The property of the events associated with the host. |

|

Depends on property type |

The property of the users associated with the host. |

|

String |

The name of the host. |

|

List |

The availability of the host. |

|

String |

The health status of the host as reported by external systems and plug-ins. |

|

String |

The cluster to which the host belongs. |

|

String |

The unique name that identifies the host on the network. |

|

Integer |

The percent of processing power used. |

|

Integer |

The percentage of memory used. |

|

Integer |

The percentage of network usage. |

|

Integer |

Jobs waiting to be executed in the run-queue per processor, in a given time slice. |

|

Integer |

The version number of the operating system. |

|

Integer |

The number of CPUs on the host. |

|

Integer |

The amount of memory available. |

|

Integer |

The processing speed of the CPU. |

|

String |

The type of CPU. |

|

Integer |

The number of virtual machines currently running. |

|

Integer |

The number of virtual machines currently being migrated. |

|

Integer |

The percentage of committed memory. |

|

String |

The tag assigned to the host. |

|

String |

The type of host. |

|

String |

The data center to which the host belongs. |

|

List |

Sorts the returned results by one of the resource properties. |

|

Integer |

The page number of results to display. |

Example

Hosts: cluster = Default and Vms.os = rhel6

This example returns a list of hosts which are part of the Default cluster and host virtual machines running the Enterprise Linux 6 operating system.

1.3.11. Searching for Networks

The following table describes all search options for networks.

| Property (of resource or resource-type) | Type | Description (Reference) |

|---|---|---|

|

Depends on property type |

The property of the cluster associated with the network. |

|

Depends on property type |

The property of the host associated with the network. |

|

String |

The human readable name that identifies the network. |

|

String |

Keywords or text describing the network, optionally used when creating the network. |

|

Integer |

The VLAN ID of the network. |

|

String |

Whether Spanning Tree Protocol (STP) is enabled or disabled for the network. |

|

Integer |

The maximum transmission unit for the logical network. |

|

String |

Whether the network is only used for virtual machine traffic. |

|

String |

The data center to which the network is attached. |

|

List |

Sorts the returned results by one of the resource properties. |

|

Integer |

The page number of results to display. |

Example

Network: mtu > 1500 and vmnetwork = true

This example returns a list of networks with a maximum transmission unit greater than 1500 bytes, and which are set up for use by only virtual machines.

1.3.12. Searching for Storage

The following table describes all search options for storage.

| Property (of resource or resource-type) | Type | Description (Reference) |

|---|---|---|

|

Depends on property type |

The property of the hosts associated with the storage. |

|

Depends on property type |

The property of the clusters associated with the storage. |

|

String |

The unique name that identifies the storage on the network. |

|

String |

The status of the storage domain. |

|

String |

The health status of the storage domain as reported by external systems and plug-ins. |

|

String |

The data center to which the storage belongs. |

|

String |

The type of the storage. |

|

Integer |

The size (GB) of the free storage. |

|

Integer |

The amount (GB) of the storage that is used. |

|

Integer |

The total amount (GB) of the storage that is available. |

|

Integer |

The amount (GB) of the storage that is committed. |

|

List |

Sorts the returned results by one of the resource properties. |

|

Integer |

The page number of results to display. |

Example

Storage: free_size > 6 GB and total_size < 20 GB

This example returns a list of storage with free storage space greater than 6 GB, or total storage space less than 20 GB.

1.3.13. Searching for Disks

The following table describes all search options for disks.

You can use the Disk Type and Content Type filtering options to reduce the number of displayed virtual disks.

|

| Property (of resource or resource-type) | Type | Description (Reference) |

|---|---|---|

|

Depends on property type |

The property of the data centers associated with the disk. |

|

Depends on property type |

The property of the storage associated with the disk. |

|

String |

The human readable name that identifies the storage on the network. |

|

String |

Keywords or text describing the disk, optionally used when creating the disk. |

|

Integer |

The virtual size of the disk. |

|

Integer |

The size of the disk. |

|

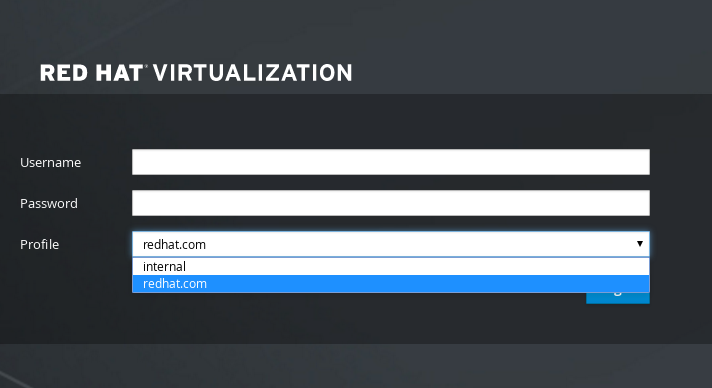

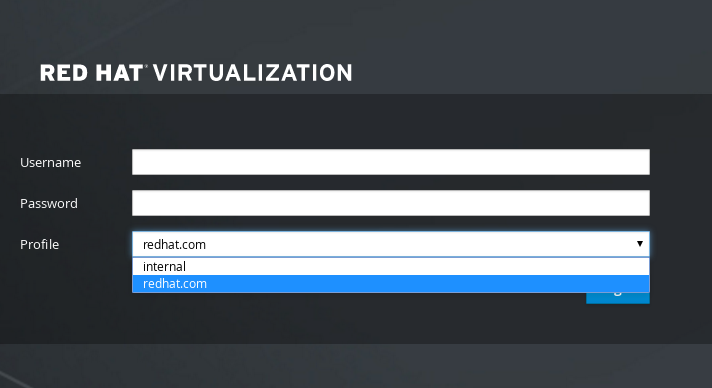

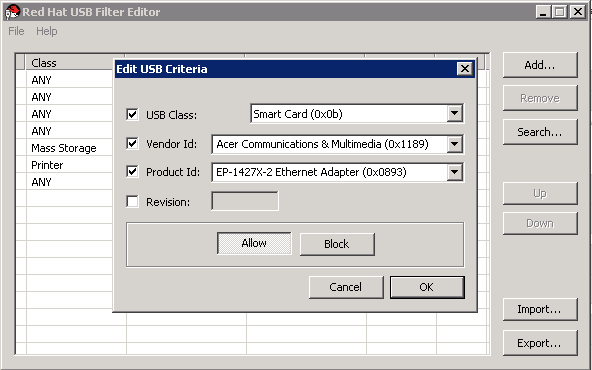

Integer |