Most of them are outdated, but provide historical design context.

They are not user documentation and should not be treated as such.

Documentation is available here.

NUMA and Virtual NUMA

Summary

This feature allows enterprise customers to provision large guests for their traditional scale-up enterprise workloads and to expect low overhead from virtualization.

- Query target host’s NUMA topology

- NUMA bindings of guest resources (vCPUs & memory)

- Virtual NUMA topology

You may also refer to the simple feature page.

Owner

- Name: Jason Liao (JasonLiao), Bruce Shi (BruceShi)

- Email: chuan.liao@hp.com, xiao-lei.shi@hp.com

- IRC: jasonliao, bruceshi @ #ovirt (irc.oftc.net)

Current status

- Target Release: oVirt 3.5

- Status: design

- Last updated: 25 Mar 2014

This is the detailed design page for NUMA and Virtual NUMA

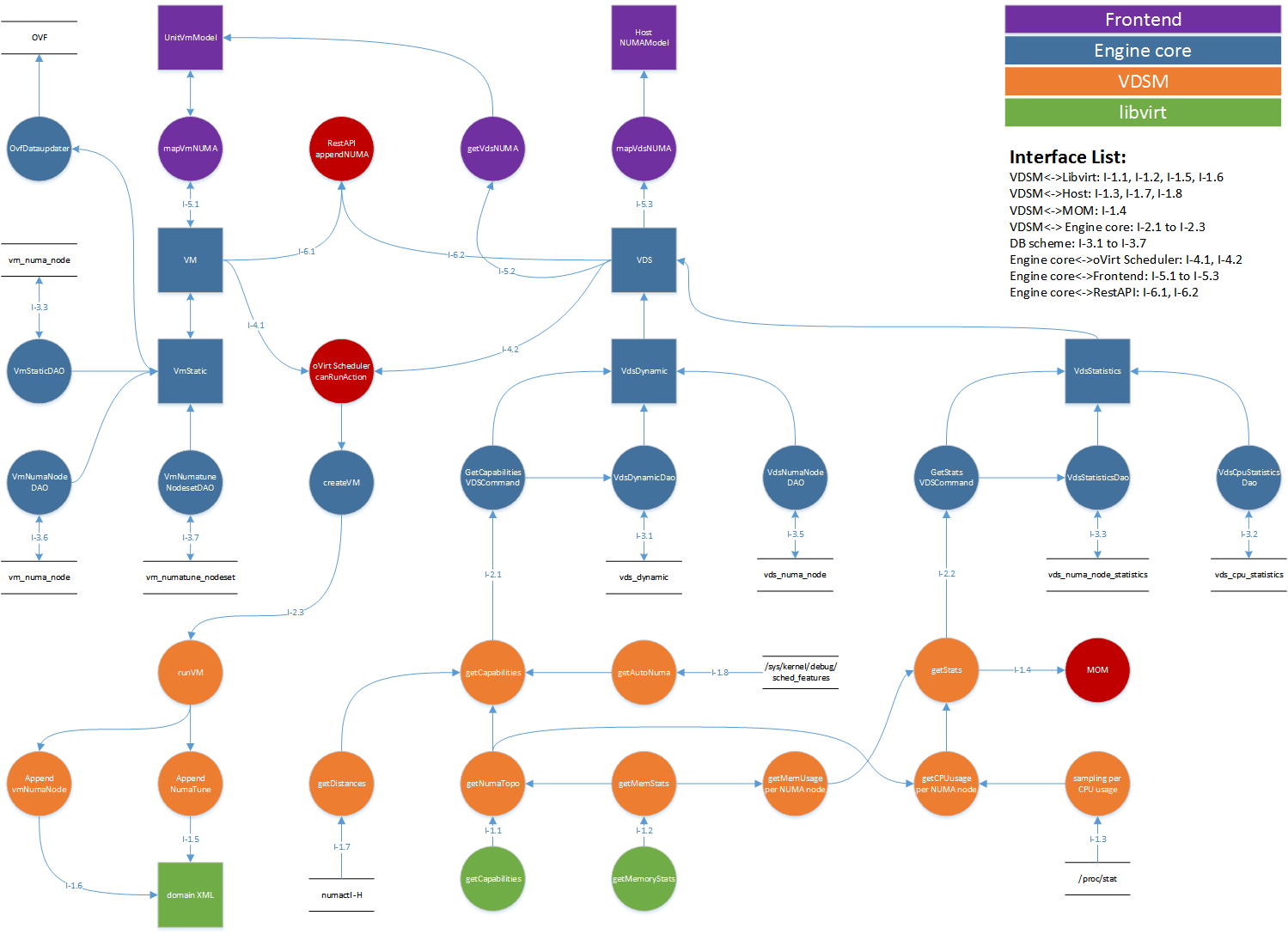

Data flow diagram

Interface & data structure

Interface between VDSM and libvirt

- I-1.1 Host’s NUMA node index and CPU id of each NUMA node

- I-1.2 Host’s NUMA node memory information, including total and free memory

- I-1.5 Configuration of the VM’s memory allocation mode and of the NUMA nodes its memory comes from

- I-1.6 Configuration of VM’s virtual NUMA topology

- I-1.1 Get the host NUMA node information by using the `getCapabilities` API in libvirt:

      <capabilities>
        …
        <host>
          ...
          <topology>
            <cells num='1'>
              <cell id='0'>
                <cpus num='2'>
                  <cpu id='0'/>
                  <cpu id='1'/>
                </cpus>
              </cell>
            </cells>
          </topology>
          …
        </host>
        …
      </capabilities>

- I-1.2 Get the host NUMA node memory information by using the `getMemoryStats` API in libvirt. The data format of the returned value is:

      { total: int, free: int }

- I-1.5 Create a new function `appendNumaTune` in the VDSM vm module to write the VM numatune configuration into the libvirt domain XML in the following format:

      <domain>
        ...
        <numatune>
          <memory mode='interleave' nodeset='0-1'/>
        </numatune>
        …
      </domain>

- I-1.6 Modify the function `appendCpu` in the VDSM vm module to write the VM virtual NUMA topology configuration into the libvirt domain XML in the following format:

      <cpu>
        ...
        <numa>
          <cell cpus='0-7' memory='10485760'/>
          <cell cpus='8-15' memory='10485760'/>
        </numa>
        ...
      </cpu>
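As an illustration of I-1.1, the following minimal sketch extracts the NUMA node index and CPU ids from a capabilities document shaped like the sample above. The function name `parse_numa_cells` and the trimmed sample XML are assumptions made for this example; the sketch parses a string and does not call libvirt.

```python
import xml.etree.ElementTree as ET

# Sample trimmed from the I-1.1 format; a real host returns much more data.
CAPS_XML = """
<capabilities>
  <host>
    <topology>
      <cells num='1'>
        <cell id='0'>
          <cpus num='2'>
            <cpu id='0'/>
            <cpu id='1'/>
          </cpus>
        </cell>
      </cells>
    </topology>
  </host>
</capabilities>
"""


def parse_numa_cells(caps_xml):
    """Return {node index: [cpu ids]} from a capabilities XML string.

    Hypothetical helper, not part of VDSM.
    """
    root = ET.fromstring(caps_xml)
    nodes = {}
    for cell in root.findall("./host/topology/cells/cell"):
        cpu_ids = [int(cpu.get("id")) for cpu in cell.findall("./cpus/cpu")]
        nodes[int(cell.get("id"))] = cpu_ids
    return nodes


print(parse_numa_cells(CAPS_XML))  # {0: [0, 1]}
```

The resulting mapping has the same shape as the `cpus` lists reported per node index to the engine.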

Interface between VDSM and Host

- I-1.3 Statistics for each host CPU core, including %usr (%usr+%nice), %sys and %idle.

- I-1.4 Data structure to be provided to MOM component

- I-1.7 NUMA distances captured from a command

- I-1.8 Automatic NUMA balancing on host

- I-1.3 Sample host CPU statistics from `/proc/stat`; the full data format is shown below. We will use columns 1 to 5, which include the user, nice, system and idle CPU counters, to calculate CPU statistics on the engine side.

      $ cat /proc/stat
      cpu 268492078 16093 132943706 6545294629 19023496 898 138160 0 57789592
      cpu0 62042038 3012 52198814 1638619972 2438624 4 12068 0 16721375
      cpu1 62779520 2733 25830756 1647361083 6001324 1 34617 0 16341547
      cpu2 77892630 5788 32963856 1610093241 8367287 889 80447 0 8205583
      cpu3 65777888 4559 21950279 1649220333 2216260 4 11027 0 16521086

- I-1.4 Data structure provided to the MOM component

  MOM uses the VDSM HypervisorInterface, calling the API.py `Global.getCapabilities` function to get host NUMA topology data:

      'autoNumaBalancing': int
      'numaNodeDistance': {'<nodeIndex>': [int], ...}
      'numaNodes': {'<nodeIndex>': {'cpus': [int], 'totalMemory': 'str'}, …}

  and the API.py `Global.getStats` function to get host NUMA statistics data:

      'numaNodeMemFree': {'<nodeIndex>': {'memFree': 'str', 'memPercent': int}, …}
      'cpuStatistics': {'<cpuId>': {'nodeIndex': int, 'cpuSys': 'str', 'cpuIdle': 'str', 'cpuUser': 'str'}, …}

- I-1.7 The libvirt API does not support getting NUMA distance information, so we use the `numactl` command to get it:

      $ numactl -H
      node distances:
      node 0 1
      0: 10 20
      1: 20 10

- I-1.8 On kernels that have the automatic NUMA balancing feature, use the command `sysctl -a | grep numa_balancing` to check whether automatic NUMA balancing is turned on or off:

      $ sysctl -a | grep numa_balancing
      kernel.numa_balancing = 1
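The counters in `/proc/stat` are cumulative, so percentages such as %usr, %sys and %idle have to be derived from the difference between two samples. The sketch below shows one way to do that arithmetic. The function names and the two tiny samples are invented for this example, and it assumes that only the user, nice, system and idle counters make up the total; the page does not say how the remaining columns are treated.

```python
def read_cpu_ticks(stat_text):
    """Map each 'cpuN' label to its (user, nice, system, idle) counters."""
    ticks = {}
    for line in stat_text.splitlines():
        fields = line.split()
        # Keep per-core lines only; skip the aggregate 'cpu' line and others.
        if len(fields) >= 5 and fields[0].startswith("cpu") and fields[0][3:].isdigit():
            ticks[fields[0]] = tuple(int(v) for v in fields[1:5])
    return ticks


def cpu_percentages(before, after):
    """Turn two samples into %usr (user + nice), %sys and %idle per core."""
    result = {}
    for cpu, new in after.items():
        user, nice, system, idle = (n - o for n, o in zip(new, before[cpu]))
        total = user + nice + system + idle
        if total == 0:
            continue  # no ticks elapsed between the samples
        result[cpu] = {
            "cpuUser": 100.0 * (user + nice) / total,
            "cpuSys": 100.0 * system / total,
            "cpuIdle": 100.0 * idle / total,
        }
    return result


# Invented samples, shaped like /proc/stat lines.
SAMPLE_1 = "cpu0 100 0 50 850 0 0 0 0 0\ncpu1 200 0 100 700 0 0 0 0 0\n"
SAMPLE_2 = "cpu0 150 10 70 870 0 0 0 0 0\ncpu1 200 0 100 800 0 0 0 0 0\n"

stats = cpu_percentages(read_cpu_ticks(SAMPLE_1), read_cpu_ticks(SAMPLE_2))
print(stats["cpu0"])  # {'cpuUser': 60.0, 'cpuSys': 20.0, 'cpuIdle': 20.0}
```

The data structures on this page carry these values as strings; the sketch keeps them as floats for readability.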

Interface between VDSM and engine core

- I-2.1 Report whether the host supports automatic NUMA balancing, the NUMA node distances and the NUMA node information (NUMA node index, CPU ids and total memory) from VDSM to engine core

- I-2.2 Report host NUMA node memory information (free memory and used memory percentage) and per-CPU statistics (system, idle and user CPU percentage) from VDSM to engine core

- I-2.3 Configuration for setting the VM’s numatune and virtual NUMA topology, sent from engine core to VDSM

- I-2.1 Transfer data format of host NUMA nodes information

      'autoNumaBalancing': int
      'numaDistances': {'<nodeIndex>': [int], ...}
      'numaNodes': {'<nodeIndex>': {'cpus': [int], 'totalMemory': 'str'}, …}

- I-2.2 Transfer data format of host CPU statistics and NUMA nodes memory information

      'numaNodeMemFree': {'<nodeIndex>': {'memFree': 'str', 'memPercent': int}, …}
      'cpuStatistics': {'<cpuId>': {'numaNodeIndex': int, 'cpuSys': 'str', 'cpuIdle': 'str', 'cpuUser': 'str'}, …}

- I-2.3 Transfer data format of set VM numatune and virtual NUMA topology

      'numaTune': {'mode': 'str', 'nodeset': 'str'}
      'guestNumaNodes': [{'cpus': 'str', 'memory': 'str'}, …]
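To show how the I-2.3 parameters could map onto the libvirt XML fragments of I-1.5 and I-1.6, here is a minimal sketch. The helper names `build_numatune` and `build_guest_numa` are hypothetical and are not the `appendNumaTune` / `appendCpu` functions planned for VDSM; the parameter values are copied from the XML samples on this page.

```python
import xml.etree.ElementTree as ET


def build_numatune(numa_tune):
    """Build a <numatune> element from the 'numaTune' dictionary."""
    numatune = ET.Element("numatune")
    ET.SubElement(numatune, "memory",
                  mode=numa_tune["mode"], nodeset=numa_tune["nodeset"])
    return numatune


def build_guest_numa(guest_numa_nodes):
    """Build a <numa> element with one <cell> per virtual NUMA node."""
    numa = ET.Element("numa")
    for node in guest_numa_nodes:
        ET.SubElement(numa, "cell", cpus=node["cpus"], memory=node["memory"])
    return numa


# Example parameters in the I-2.3 format (values taken from the samples).
params = {
    "numaTune": {"mode": "interleave", "nodeset": "0-1"},
    "guestNumaNodes": [
        {"cpus": "0-7", "memory": "10485760"},
        {"cpus": "8-15", "memory": "10485760"},
    ],
}

numatune_el = build_numatune(params["numaTune"])
numa_el = build_guest_numa(params["guestNumaNodes"])
print(ET.tostring(numatune_el, encoding="unicode"))
print(ET.tostring(numa_el, encoding="unicode"))
```

All values stay strings, matching the `'str'` types in the transfer format.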

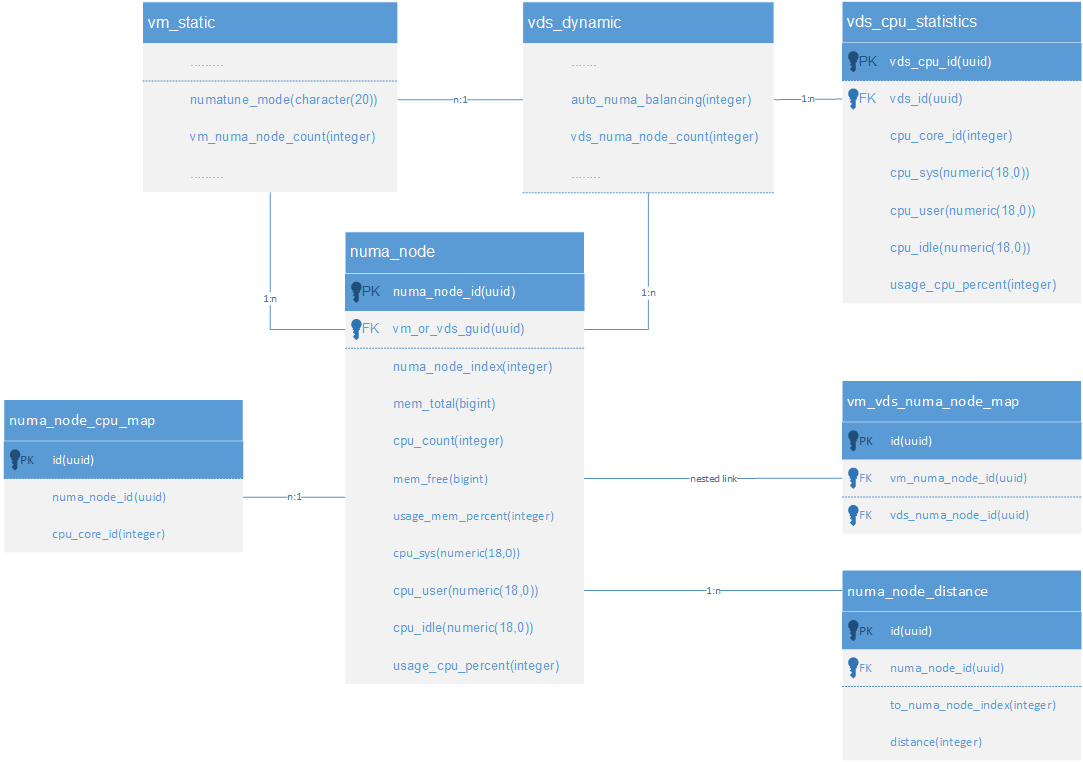

Interface between engine core and database (schema)

- I-3.1 Schema modification of the `vds_dynamic` table to include the host’s NUMA node count and automatic NUMA balancing status.

- I-3.2 Add table `vds_cpu_statistics` to include host CPU statistics (system, user, idle CPU time and used CPU percentage).

- I-3.3 Schema modification of the `vm_static` table to include the numatune mode configuration and virtual NUMA node count.

- I-3.4 Add table `numa_node` to include host/VM NUMA node information (node index, total memory, CPU count of each node) and statistics (system, user, idle CPU time, used CPU percentage, free memory and used memory percentage).

- I-3.5 Add table `vm_vds_numa_node_map` to include the configuration of VM virtual NUMA nodes pinned to host NUMA nodes (this is a nested relationship table that stores the mapping between VM NUMA nodes and host NUMA nodes, all of which are in table `numa_node`).

- I-3.6 Add table `numa_node_cpu_map` to include the CPU information that each host/VM NUMA node contains.

- I-3.7 Add table `numa_node_distance` to include the distance information between the NUMA nodes.

The above interfaces are defined with database design diagram

- Related database script changes:

  - Add `numa_sp.sql` to include the stored procedures that handle operations on tables `numa_node`, `numa_node_cpu_map`, `vm_vds_numa_node_map` and `numa_node_distance`. It will provide stored procedures to insert, update and delete data, plus various query functions.

  - Modify `vds_sp.sql` to add stored procedures that handle operations on table `vds_cpu_statistics`, including insert, update, delete and various query functions.

  - Modify the functions `InsertVdsDynamic` and `UpdateVdsDynamic` in `vds_sp.sql` to add the new columns `auto_numa_banlancing` and `vds_numa_node_count`.

  - Modify the functions `InsertVmStatic` and `UpdateVmStatic` in `vms_sp.sql` to add the two new columns `numatune_mode` and `vm_numa_node_count`.

  - Modify `create_views.sql` to add the new columns `numatune_mode` and `vm_numa_node_count` in views `vms` and `vms_with_tags`, and the new columns `auto_numa_banlancing` and `vds_numa_node_count` in views `vds` and `vds_with_tags`.

  - Modify `create_views.sql` to add new views: `vds_numa_node_view`, which joins `vds_dynamic` and `numa_node`, and `vm_numa_node_view`, which joins `vm_static` and `numa_node`.

  - Modify `upgrade/post_upgrade/0010_add_object_column_white_list_table.sql` to add the new columns `auto_numa_banlancing` and `vds_numa_node_count`.

  - Add one script under `upgrade/` to create the tables `numa_node`, `vds_cpu_statistics`, `vm_vds_numa_node_map`, `numa_node_cpu_map` and `numa_node_distance`, and to add columns to tables `vds_dynamic` and `vm_static`.

  - Create the following indexes:

    - Index on column `vm_or_vds_guid` of table `numa_node`

    - Index on column `vds_id` of table `vds_cpu_statistics`

    - Index on column `numa_node_id` of table `numa_node_cpu_map`

    - Index on column `numa_node_id` of table `numa_node_distance`

    - Indexes on each of the columns `vm_numa_node_id` and `vds_numa_node_id` of table `vm_vds_numa_node_map`

- Related DAO changes:

  - Add `NumaNodeDAO` and the related implementation to provide save, update, delete and various queries on tables `numa_node`, `numa_node_cpu_map`, `vm_vds_numa_node_map` and `numa_node_distance`. Add `NumaNodeDAOTest` for `NumaNodeDAO` at the same time.

  - Add `VdsCpuStatisticsDao` and the related implementation to provide save, update, delete and various queries on table `vds_cpu_statistics`. Add `VdsCpuStatisticsDAOTest` for `VdsCpuStatisticsDAO` at the same time.

  - Modify `VdsDynamicDAODbFacadeImpl` and `VdsDAODbFacadeImpl` to add the mapping of the new columns `auto_numa_banlancing` and `vds_numa_node_count`. Run `VdsDynamicDAOTest` to verify the modification.

  - Modify `VmStaticDAODbFacadeImpl` and `VmDAODbFacadeImpl` to add the mapping of the new columns `numatune_mode` and `numa_node_count`. Run `VmStaticDAOTest` to verify the modification.

- Related search engine change

Currently, we plan to provide the search functions below for the NUMA feature. Each field supports the numeric relations “>”, “<”, “>=”, “<=”, “=” and “!=”.

- Search hosts with the below NUMA related fields:

- NUMA node number

- NUMA node cpu count

- NUMA node total memory

- NUMA node memory usage

- NUMA node cpu usage

- Search vms with the below NUMA related fields:

- NUMA tune mode

- Virtual NUMA node number

- Virtual NUMA node vcpu count

- Virtual NUMA node total memory

NUMA tune mode supports enum value relations; the other fields support the numeric relations.

We will make the following modifications:

- Modify `org.ovirt.engine.core.searchbackend.SearchObjects` to add a new entry, NUMANODES.

- Add `org.ovirt.engine.core.searchbackend.NumaNodeConditionFieldAutoCompleter` to provide auto-completion for NUMA node related filters.

- Modify `org.ovirt.engine.core.searchbackend.SearchObjectAutoCompleter` to add new joins: HOST joins NUMANODES on vds_id, and VM joins NUMANODES on vm_guid. Add new entries in entitySearchInfo accordingly. NUMANODES will use the newly added views vds_numa_node_view and vm_numa_node_view.

- Modify `org.ovirt.engine.core.searchbackend.VdsCrossRefAutoCompleter` to add the auto-complete entry NUMANODES.

- Cascade-delete

  - When a user removes a virtual NUMA node, the related rows in tables `numa_node_cpu_map`, `vm_vds_numa_node_map`, `numa_node_distance` (possibly in the future; currently there is no distance information for virtual NUMA nodes) and `numa_node` should be removed at the same time.

  - When a user removes a VM, all the virtual NUMA nodes of this VM should be removed, following the item above for the cascade-delete.

  - When a user removes a host, the related rows in tables `numa_node_cpu_map`, `vm_vds_numa_node_map`, `numa_node_distance`, `numa_node` and `vds_cpu_statistics` should be removed at the same time.
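One way to get the cascade-delete behaviour described above is to let foreign keys with `ON DELETE CASCADE` do the work. The sketch below is an assumption, not the page's stated mechanism (the page plans stored procedures in `numa_sp.sql`), and it uses an in-memory SQLite database in place of the engine's real database. The table names and the columns `numa_node_id`, `vm_or_vds_guid`, `vm_numa_node_id` and `vds_numa_node_id` come from this page; `numa_node_index` and `cpu_core_id` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript("""
CREATE TABLE numa_node (
    numa_node_id    INTEGER PRIMARY KEY,
    vm_or_vds_guid  TEXT NOT NULL,
    numa_node_index INTEGER NOT NULL
);
CREATE TABLE numa_node_cpu_map (
    numa_node_id INTEGER NOT NULL
        REFERENCES numa_node(numa_node_id) ON DELETE CASCADE,
    cpu_core_id  INTEGER NOT NULL
);
CREATE TABLE vm_vds_numa_node_map (
    vm_numa_node_id  INTEGER NOT NULL
        REFERENCES numa_node(numa_node_id) ON DELETE CASCADE,
    vds_numa_node_id INTEGER
        REFERENCES numa_node(numa_node_id) ON DELETE CASCADE
);
""")

# One host NUMA node (id 1) and one virtual NUMA node (id 2) pinned to it.
conn.executemany("INSERT INTO numa_node VALUES (?, ?, ?)",
                 [(1, "host-1", 0), (2, "vm-1", 0)])
conn.executemany("INSERT INTO numa_node_cpu_map VALUES (?, ?)",
                 [(1, 0), (1, 1), (2, 0)])
conn.execute("INSERT INTO vm_vds_numa_node_map VALUES (?, ?)", (2, 1))

# Removing the VM's node also removes its CPU map and pinning rows.
conn.execute("DELETE FROM numa_node WHERE vm_or_vds_guid = ?", ("vm-1",))


def count(table):
    """Return the number of rows left in the given table."""
    return conn.execute("SELECT COUNT(*) FROM " + table).fetchone()[0]


print(count("numa_node"), count("numa_node_cpu_map"), count("vm_vds_numa_node_map"))
```

After the delete, only the host node and its two CPU rows remain.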

Interface and data structure in engine core

- Entities

  - `VDS` has many `VdsNumaNode` objects in dynamic data (collected from VDS capabilities).

  - `VdsNumaNode` is the core entity for host NUMA topology; it links to one statistics object, `VdsNumaNodeStatistics`, which contains real-time data (free memory, NUMA node CPU usage, etc.).

  - `VM` has many `VmNumaNode` objects in dynamic data (configured by user).

  - `VmNumaNode` is the core entity for VM NUMA topology.

  - `NumaTuneMode` is the memory tune mode (configured by user).

  - `VdsNumaNode` has a one-to-many relationship with `VmNumaNode`.

  - `VdsNumaNode.cpuIds` links with `CpuStatistics.cpuId` to show the usage of each CPU inside a NUMA node.

- Action & Query

  - `GetVdsNumaNodeByVdsId`, `GetVmNumaNodeByVmId`, `GetVmNumaNodeByVdsNumaNodeId` and `GetCpuStatsByVdsId` use the same parameters, `IdQueryParameters`.

  - `AddVmNumaNode`, `UpdateVmNumaNode` and `RemoveVmNumaNode` use the same parameters, `VmNumaNodeParameters`, to manage virtual NUMA nodes in a VM.

  - `SetNumaTuneMode` uses parameters `NumaTuneModeParameters` to set the NUMA tuning mode for a VM.

  - `GetVdsNumaNodeByVdsId` will return `List<VdsNumaNode>`.

  - `GetVmNumaNodeByVmId` and `GetVmNumaNodeByVdsNumaNodeId` will return `List<VmNumaNode>`.

  - `GetVmNumaNodeByVdsNumaNodeId` will query the `VmNumaNode`s under the `VdsNumaNode`.

  - `GetCpuStatsByVdsId` will return `List<CpuStatistics>`.

  - When `VmNumaNodeParameters.vdsNumaNodeId` is set to null, the `VmNumaNode` is unassigned (not pinned to a host NUMA node).
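The entity relationships above can be sketched as plain data classes. The engine entities are Java classes, so the Python below is only a model of their shape. Every field name other than the class names is an assumption, and the three `NumaTuneMode` values are libvirt's numatune memory modes rather than a list taken from this page.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class NumaTuneMode(Enum):
    # libvirt numatune memory modes; the page itself only shows 'interleave'.
    STRICT = "strict"
    PREFERRED = "preferred"
    INTERLEAVE = "interleave"


@dataclass
class CpuStatistics:
    cpu_id: int
    cpu_sys: float
    cpu_user: float
    cpu_idle: float


@dataclass
class VdsNumaNode:
    index: int
    cpu_ids: List[int]
    mem_total: int


@dataclass
class VmNumaNode:
    index: int
    cpu_ids: List[int]
    mem_total: int
    vds_numa_node_index: Optional[int] = None  # None: not pinned to a host node


def node_cpu_usage(node, stats):
    """Average busy percentage (100 - idle) over the CPUs of one host node."""
    by_id = {s.cpu_id: s for s in stats}
    busy = [100.0 - by_id[c].cpu_idle for c in node.cpu_ids if c in by_id]
    return sum(busy) / len(busy) if busy else 0.0


host_node = VdsNumaNode(index=0, cpu_ids=[0, 1], mem_total=16384)
cpu_stats = [
    CpuStatistics(cpu_id=0, cpu_sys=5.0, cpu_user=15.0, cpu_idle=80.0),
    CpuStatistics(cpu_id=1, cpu_sys=10.0, cpu_user=30.0, cpu_idle=60.0),
    CpuStatistics(cpu_id=2, cpu_sys=0.0, cpu_user=0.0, cpu_idle=100.0),
]
print(node_cpu_usage(host_node, cpu_stats))  # 30.0
```

`node_cpu_usage` illustrates the `cpuIds` to `cpuId` link: CPU 2 belongs to another node and is ignored.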

Interface and data structure in ovirt scheduler

Add NUMA filter and weight module to oVirt’s scheduler, and add those to all cluster policies (inc. user defined).

- NUMA Filter

  - Fetches the (scheduled) VM’s virtual NUMA nodes.

  - Fetches the topology of all virtual NUMA nodes (CPU count, total memory).

  - Fetches the NUMA node topology of all hosts (CPU count, total memory).

  - Removes all hosts that do not match the required NUMA node topology:

    - for positive, host NUMA node’s CPU count > virtual NUMA node’s CPU count

    - for positive, host NUMA node’s total memory > virtual NUMA node’s total memory

- NUMA Weight Module

  - Fetches the (scheduled) VM’s virtual NUMA nodes.

  - Fetches the topology of all virtual NUMA nodes (CPU count, total memory, NUMA distance).

  - Fetches the NUMA node topology and statistics of all hosts (CPU usage, free memory).

  - Scores the hosts according to the score of each of their NUMA nodes:

    - for positive, in case a VM of the group is running on a certain host, give all other hosts a higher weight.

    - for positive, give the host a higher weight if the host NUMA node’s CPU is used up.

    - for positive, give the host a higher weight if the host NUMA node’s memory is used up.
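The filter and weight steps above can be sketched as two small functions. This is a simplified illustration under stated assumptions, not the scheduler's actual policy units. It assumes a host passes when every virtual NUMA node fits inside at least one host NUMA node (several virtual nodes may match the same host node), and that a higher weight makes a host less attractive, so candidates are ranked by ascending weight. The dictionary keys and the sample hosts are invented.

```python
def passes_numa_filter(host_nodes, vm_nodes):
    """True if every virtual node fits inside at least one host node."""
    return all(
        any(h["cpus"] > v["cpus"] and h["memory"] > v["memory"] for h in host_nodes)
        for v in vm_nodes
    )


def numa_weight(host_nodes):
    """Sum per-node CPU and memory usage; busier nodes raise the weight."""
    return sum(n["cpu_usage"] + n["mem_usage"] for n in host_nodes)


# Invented sample data: one NUMA node per host, usage given in percent.
HOSTS = {
    "host-a": [{"cpus": 8, "memory": 16384, "cpu_usage": 20, "mem_usage": 30}],
    "host-b": [{"cpus": 4, "memory": 8192, "cpu_usage": 5, "mem_usage": 5}],
    "host-c": [{"cpus": 16, "memory": 32768, "cpu_usage": 70, "mem_usage": 80}],
}
VM_NODES = [{"cpus": 4, "memory": 4096}]

candidates = [name for name, nodes in HOSTS.items()
              if passes_numa_filter(nodes, VM_NODES)]
ranked = sorted(candidates, key=lambda name: numa_weight(HOSTS[name]))
print(ranked)  # ['host-a', 'host-c']
```

`host-b` is filtered out because its node has 4 CPUs, which is not strictly greater than the virtual node's 4.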

Scheduler-generated virtual NUMA topology: to be continued …

Interface and data structure in restful API

host NUMA sub-collection

/api/hosts/{host:id}/numanodes/

- Supported actions

- GET returns a list of host NUMA nodes. (using query GetVdsNumaNodeByVdsId)

host NUMA resource

/api/hosts/{host:id}/numanodes/{numa:id}

- Supported actions

- GET returns information about a specific NUMA node: CPU list, total memory, map of distances to other nodes. (using VdsNumaNode properties)

host NUMA statistics

/api/hosts/{host:id}/numanodes/{numa:id}/statistics

- Supported actions

- GET returns statistics for a specific NUMA node: CPU usage, free memory. (using VdsNumaNode property NumaNodeStatistics)

vm virtual NUMA sub-collection

/api/vms/{vm:id}/numanodes

- Supported actions:

- GET returns a list of VM virtual NUMA nodes. (using query GetVmNumaNodeByVmId)

- POST attach a new virtual NUMA node on VM. (using action AddVmNumaNode)

vm virtual NUMA resource

/api/vms/{vm:id}/numanodes/{vnuma:id}

- Supported actions:

- GET returns information about a specific virtual NUMA node: CPU list, total memory, pinned host NUMA nodes. (using VmNumaNode properties)

- PUT updates a virtual NUMA node configured on the VM. (using action UpdateVmNumaNode)

- DELETE removes a virtual NUMA node from the VM. (using action DeleteVmNumaNode)
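As a compact summary of the resources above, the sketch below maps an HTTP method and path to the engine query or action named on this page. The `ROUTES` table and the `resolve` helper are illustrative only and are not part of the REST API implementation. Only the entries for which the page names a query or action are listed.

```python
import re

# (HTTP method, path pattern, engine query or action named on this page)
ROUTES = [
    ("GET", r"/api/hosts/[^/]+/numanodes/?", "GetVdsNumaNodeByVdsId"),
    ("GET", r"/api/vms/[^/]+/numanodes/?", "GetVmNumaNodeByVmId"),
    ("POST", r"/api/vms/[^/]+/numanodes/?", "AddVmNumaNode"),
    ("PUT", r"/api/vms/[^/]+/numanodes/[^/]+", "UpdateVmNumaNode"),
    ("DELETE", r"/api/vms/[^/]+/numanodes/[^/]+", "DeleteVmNumaNode"),
]


def resolve(method, path):
    """Return the engine query/action for a request, or None if unsupported."""
    for route_method, pattern, name in ROUTES:
        if route_method == method and re.fullmatch(pattern, path):
            return name
    return None


print(resolve("POST", "/api/vms/123/numanodes"))          # AddVmNumaNode
print(resolve("DELETE", "/api/hosts/abc/numanodes/0"))    # None
```

Host NUMA nodes are read-only in this design, which is why the DELETE on a host node resolves to nothing.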